Connecting Vision and Language: A Deep Dive into OpenAI’s CLIP

For years, the gold standard in computer vision involved training models on massive, manually labeled datasets like ImageNet. While incredibly successful, this approach has a fundamental limitation: the model’s knowledge is confined to the specific categories it was trained on. In a parallel revolution, natural language processing (NLP) models like GPT-3 moved towards pre-training on the vast, raw text of the internet, learning flexible and generalizable knowledge. The OpenAI CLIP paper asks a powerful question: can we bring the NLP pre-training paradigm to computer vision and learn from the rich, descriptive text that naturally accompanies images online?

Abstract

The Problem: The “Fixed Set” Limitation of Vision Models

The authors start by highlighting a long-standing challenge in computer vision.

State-of-the-art computer vision systems are trained to predict a fixed set of predetermined object categories. This restricted form of supervision limits their generality and usability since additional labeled data is needed to specify any other visual concept.

For years, the standard approach was to train a model on a dataset with a predefined list of categories, like the 1000 object classes in the famous ImageNet dataset. If a model was trained to recognize “cats,” “dogs,” and “cars,” it had no inherent ability to recognize a “horse” or a “bicycle.” To teach it a new concept, you had to go back, collect and label thousands of new images, and fine-tune or retrain the model. This process is expensive, slow, and fundamentally limits a model’s real world usefulness. The world is not a fixed set of 1000 categories.

The Solution: Learning from Natural Language

Instead of relying on these rigid, curated datasets, the authors propose a more natural and scalable alternative.

Learning directly from raw text about images is a promising alternative which leverages a much broader source of supervision. We demonstrate that the simple pre-training task of predicting which caption goes with which image is an efficient and scalable way to learn SOTA image representations from scratch on a dataset of 400 million (image, text) pairs collected from the internet.

This is the core idea of CLIP. The internet is filled with images, and those images are often paired with descriptive text: captions, articles, titles, etc. This text provides a rich source of information, or what the authors call supervision. Instead of teaching a model that an image maps to a single label like dog, we can teach it that an image maps to a descriptive phrase like "a photo of a golden retriever playing in the park".

By training on a massive, custom-built dataset of 400 million of these (image, text) pairs, the model learns a much more nuanced and flexible understanding of visual concepts. The goal of the training is simple: given a batch of images and a batch of captions, the model must figure out which caption correctly describes which image.

The Payoff: True Zero-Shot Transfer

This training approach unlocks the model’s most powerful capability.

After pre-training, natural language is used to reference learned visual concepts (or describe new ones) enabling zero-shot transfer of the model to downstream tasks.

Because CLIP learns to connect the content of an image to the meaning of text, you can now give it classification tasks it has never seen before, simply by describing the classes in plain English. This is called zero-shot transfer.

Imagine you want to classify photos of different dog breeds. With a traditional model, you’d need a labeled dataset of thousands of dog photos. With CLIP, you simply provide the text descriptions, like "a photo of a golden retriever", "a photo of a poodle", "a photo of a husky", and the model can instantly classify images into these new categories without seeing a single labeled example. It’s using its pre-trained knowledge to connect what it “sees” in the image to the text you provide.

The Evidence: It Actually Works

The authors back up this powerful claim with extensive testing.

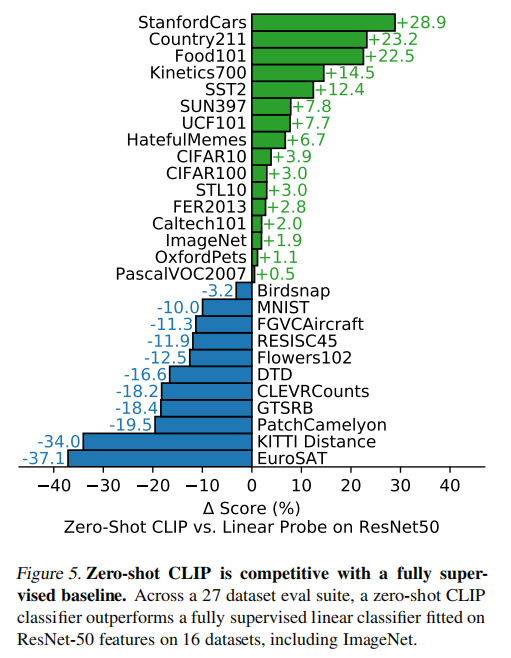

We study the performance of this approach by benchmarking on over 30 different existing computer vision datasets… For instance, we match the accuracy of the original ResNet-50 on ImageNet zero-shot without needing to use any of the 1.28 million training examples it was trained on.

This is the headline result. They tested CLIP’s zero-shot performance across a huge range of tasks, from recognizing objects and actions to reading text (OCR). The most stunning demonstration is its performance on ImageNet. Without being trained on any of the 1.28 million ImageNet training images, CLIP was able to match the accuracy of a fully-supervised ResNet-50 model that was explicitly trained on that data. This proves that learning from natural language supervision is not just a clever idea, but a highly effective method for building general-purpose visual models.

One of the most profound ideas in the CLIP paper is that the model does not have a fixed list of categories it can recognize. This is a radical departure from how most computer vision models worked before it. To truly appreciate this, let’s compare the traditional approach to CLIP’s new paradigm.

The Traditional Approach: A Built-in Classifier

Think of a classic image classification model like a ResNet-50 trained on the ImageNet dataset. Its architecture is typically composed of two main parts:

- Feature Extractor: A deep stack of convolutional layers that process an input image and convert it into a high level feature representation (essentially, a vector or list of numbers).

- Classifier Head: A final, fully connected layer at the very end of the network. For a model trained on ImageNet, this layer has exactly 1000 outputs, one for each of the 1000 specific classes in the dataset.

The model is trained to make the output corresponding to the correct class have the highest score. This structure is rigid. The model can only ever predict one of the 1000 classes it was explicitly built to recognize. If you want it to recognize a new object, you have to replace or retrain this final layer.

The CLIP Approach: A Dynamic Classifier from Language

CLIP gets rid of the fixed classifier head entirely. Instead, it learns a shared space where both images and text can coexist. It does this using two separate encoders:

- Image Encoder: Takes an image and turns it into a feature vector.

- Text Encoder: Takes a piece of text (a word, a phrase, or a sentence) and turns it into a feature vector.

The key is that both models are trained together to place the vectors for a matching (image, text) pair as close as possible in this shared space, which we can call a “multimodal embedding space”. Think of it as a universal “concept space” where visual and textual ideas that mean the same thing are placed near each other.

So, when you ask CLIP to perform a classification task, here is what happens behind the scenes:

- Step 1: Encode the Image. You feed a single image (for instance, a photo of a cat) into CLIP’s Image Encoder. The output is a single vector that numerically represents the content of the image.

- Step 2: Encode the Potential Classes. You create a list of text descriptions for all your target classes. For example:

"a photo of a dog","a photo of a car","a photo of a cat". You then feed this list into CLIP’s Text Encoder. The output is a set of vectors, one for each text description. - Step 3: Find the Best Match. CLIP then calculates the similarity (specifically, the cosine similarity) between the one image vector and every single one of the text vectors.

- Step 4: Make the Prediction. The text description whose vector is most similar to the image vector is the model’s prediction. In our example, the vector for

"a photo of a cat"would be “closest” to the image vector, making that the final classification.

The “classifier” is not a static part of the model’s architecture; it is something you create dynamically at inference time just by providing text. This is what gives CLIP its incredible flexibility. You can swap out your list of classes for any other visual concept you can describe with words, all without retraining the model. This is the essence of its powerful zero shot capability.

1. Introduction and Motivating Work

The NLP Revolution: A Blueprint for Vision

To understand the genius of CLIP, we first have to look away from computer vision and towards its sister field, Natural Language Processing. The authors of CLIP didn’t invent their core strategy from scratch; they brilliantly adapted a paradigm that had already proven phenomenally successful in the world of text.

Pre-training methods which learn directly from raw text have revolutionized NLP over the last few years (Dai & Le, 2015; Peters et al., 2018; Howard & Ruder, 2018; Radford et al., 2018; Devlin et al., 2018; Raffel et al., 2019).

The authors are referencing a seismic shift in NLP. Before this “revolution,” NLP models were often trained for a specific task (e.g., sentiment analysis) on a relatively small, task specific dataset. The breakthrough was the idea of pre-training: first, train a massive model on a gigantic corpus of raw, unlabeled text from the internet. The goal wasn’t to perform a specific task, but to learn the underlying patterns, grammar, and concepts of language itself. This pre-trained model could then be quickly adapted (or “fine-tuned”) for specific tasks with much less data and achieve state of the art results.

Task-agnostic objectives such as autoregressive and masked language modeling have scaled across many orders of magnitude in compute, model capacity, and data, steadily improving capabilities.

This sentence explains how this pre-training works. Since you don’t have explicit labels, you need a “self-supervised” or task-agnostic objective. This means the learning task is generated from the data itself, not from human labels. The two most famous objectives are:

- Autoregressive Language Modeling: This simply means “predicting the next word.” The model is given a sequence of text like “The cat sat on the” and its goal is to predict the next word, “mat”. This is the fundamental principle behind models like GPT (Generative Pre-trained Transformer).

- Masked Language Modeling (MLM): Instead of just predicting the next word, this approach takes a sentence, masks out a word (e.g., “The cat [MASK] on the mat”), and tasks the model with predicting the missing word. This forces the model to learn context from both the left and the right, and it’s the core idea behind the hugely influential BERT model.

These simple, scalable objectives allowed researchers to throw massive amounts of data and compute at their models, leading to rapid improvements.

The development of “text-to-text” as a standardized input-output interface … has enabled task-agnostic architectures to zero-shot transfer to downstream datasets removing the need for specialized output heads or dataset specific customization. Flagship systems like GPT-3 … are now competitive across many tasks with bespoke models while requiring little to no dataset specific training data.

This is the ultimate payoff of the NLP paradigm. By framing every problem as a “text-in, text-out” task, models became incredibly flexible. For example, instead of training a specialized translation model, you could just feed a model like Google’s T5 the text: "translate English to German: Hello, how are you?" and it would learn to output: "Hallo, wie geht es Ihnen?".

This flexibility, supercharged by massive scale, led to models like GPT-3. GPT-3 is a single, pre-trained model that can perform a staggering variety of tasks it was never explicitly trained for (summarization, coding, creative writing, classification) simply by being given the right text prompt. It doesn’t need specialized “output heads” (like the fixed classifier layers we discussed earlier) and requires little to no task specific training data.

In essence, the authors are setting the stage by saying: “Look at the incredible power and flexibility NLP unlocked by moving from small, labeled datasets to massive, self-supervised pre-training. We are going to do the same thing for computer vision.”

While both are massive Transformer-based models pre-trained on web-scale text, GPT-3 and T5 are built on different fundamental principles that make them better suited for different kinds of tasks.

At a high level, you can think of the difference with this analogy:

- GPT-3 is a brilliant autocomplete. It is an expert at continuing a piece of text. Its core strength is open-ended generation.

- T5 is a universal translator. It is an expert at transforming an input text into a desired output text. Its core strength is transformation and structured tasks.

Let’s look at the key technical differences that lead to this behavior.

1. Training Objective: The Core Task

This is the most important distinction.

GPT-3 (Autoregressive): As we discussed, GPT-3 is trained on an autoregressive objective, which simply means “predict the next word.” It reads text from left to right and learns to predict the most probable next token given the preceding context. It never sees “the future”; it only ever looks backward.

T5 (Text-to-Text Denoising): T5 (Text-to-Text Transfer Transformer) is trained on a “fill-in-the-blank” objective, inspired by BERT’s Masked Language Modeling. During pre-training, it takes a clean sentence, randomly “corrupts” it by masking out spans of text, and is then asked to reconstruct the original, uncorrupted text.

For example:

- Original:

Thank you for inviting me to the party last week. - Corrupted Input to T5:

Thank you <X> me to the party <Y> week. - T5’s Target Output:

<X> for inviting <Y> last

This “denoising” objective forces the model to become very good at understanding the full context of a sentence to fill in the missing parts. This makes it a natural fit for tasks that require transforming an input into an output.

- Original:

2. Model Architecture

Their training objectives lead to different choices in the underlying Transformer architecture.

GPT-3 (Decoder-Only): Because its only job is to generate the next word based on past context, GPT-3 uses only the decoder blocks from the original Transformer architecture. The decoder’s “masked self-attention” mechanism is perfectly suited for this, as it ensures that when predicting a word, the model can only attend to the words that came before it.

T5 (Encoder-Decoder): T5 uses the full encoder-decoder architecture from the original Transformer.

- The Encoder reads the entire corrupted input sequence at once (e.g.,

Thank you <X> me to the party...). This allows it to build a complete, bidirectional understanding of the context. - The Decoder then takes the encoder’s representation and generates the target output (e.g.,

<X> for inviting...) in an autoregressive, word-by-word fashion. This structure is ideal for sequence-to-sequence tasks like translation and summarization.

- The Encoder reads the entire corrupted input sequence at once (e.g.,

3. Typical Use Case

Their design differences make them shine in different scenarios.

- GPT-3 is best for:

- Open-ended generation: Creative writing, brainstorming, writing code, creating long-form content.

- Few-shot prompting: Its massive scale gives it an incredible ability to perform tasks just by seeing a few examples in the prompt, without any retraining.

- Chatbots and conversational AI.

- T5 is best for:

- Transformation tasks: Summarization (long text in, short text out), translation (English in, German out), question answering (question in, answer out).

- Fine-tuning: It serves as a powerful, general-purpose base model that is explicitly designed to be fine-tuned on specific datasets to become an expert at a particular transformation task.

Summary Table

| Feature | GPT-3 (and GPT family) | T5 (and BERT-style models) |

|---|---|---|

| Primary Goal | Generation | Transformation |

| Training Objective | Autoregressive (Predict next word) | Denoising (Fill in the blanks) |

| Architecture | Decoder-Only | Encoder-Decoder |

| Data Flow | Unidirectional (looks at past context) | Bidirectional (looks at all context) |

| Best For | Creative writing, few-shot prompting | Summarization, translation, fine-tuning |

The CLIP paper references the innovations from both of these camps. It takes the idea of massive scale and flexible, zero-shot transfer from the GPT world and applies it to a task that is conceptually more like a transformation (image in, text description out).

This is a brilliant question that highlights a key development in modern AI. The base GPT-3 model is, at its core, an autoregressive, “next-word predictor.” In contrast, models like T5 are built with an encoder-decoder structure that is a more natural architectural fit for summarization. So how can ChatGPT, which is based on the GPT architecture, be so good at it?

The answer lies in two concepts: emergent abilities from scale and a powerful fine-tuning process called instruction tuning (and RLHF).

1. Emergent Abilities from Scale

A base GPT model is trained on a simple goal: predict the next word. But to get really good at this task across a dataset as vast and diverse as the internet, the model cannot simply memorize sequences. It is forced to build a deep, internal understanding of language and the world. It must learn:

- Grammar and Syntax: The rules of language.

- Semantic Concepts: The meaning of words and how they relate (e.g., that “king” - “man” + “woman” is close to “queen”).

- Factual Knowledge: Information about people, places, and events.

- Context and Cohesion: How sentences and paragraphs logically follow each other.

To accurately predict the next word of a complex article, the model must implicitly keep track of the article’s main topic and key points. In a sense, the ability to “understand” for the purpose of summarization is an emergent property that arises as a side effect of getting extremely good at next-word prediction at a massive scale.

The base model saw countless examples of articles followed by abstracts or summaries in its training data. So, if you prompt it correctly (e.g., by providing an article followed by TL;DR:), it recognizes this pattern and knows that the most probable “next words” are a condensed version of the preceding text. It’s completing a pattern it has learned.

2. Instruction Tuning and RLHF: The Secret Sauce of ChatGPT

This is the most critical factor. ChatGPT is not the base GPT-3 model. It is a variant that has gone through an extensive, second phase of training designed specifically to make it a helpful assistant.

This process involves two main steps:

Instruction Tuning (Supervised Fine-Tuning): First, the base GPT model is fine-tuned on a high-quality, curated dataset of

(instruction, desired_output)pairs. These were created by human labelers. For summarization, this dataset would contain thousands of examples like:Instruction:

"Summarize the following scientific abstract for a fifth-grader: [long, complex abstract text]"Desired Output:

"[simple, easy-to-understand summary]"By training on millions of such instructions across thousands of different tasks, the model learns to generalize the concept of following instructions, not just completing patterns.

Reinforcement Learning from Human Feedback (RLHF): This is the step that truly refines the model’s behavior. In this stage, the model generates several different responses to a single prompt (e.g., four different summaries). A human rater then ranks these responses from best to worst. This feedback is used to train a separate “reward model.” Finally, the main GPT model is fine-tuned again using reinforcement learning to maximize the score it gets from this reward model.

In simple terms, RLHF trains the model to produce outputs that humans find helpful, accurate, and well-written.

The Bottom Line

You can think of it like this:

- Base GPT-3 is like a brilliant student who has read every book in the library. They have all the knowledge, but they are not trained to apply it to specific tasks for you. They might answer your question, or they might just continue your sentence in a creative but unhelpful way.

- ChatGPT is that same brilliant student after they have completed a rigorous apprenticeship on how to be the world’s best assistant. They have been explicitly trained to understand and follow instructions, including “summarize this,” making them far more reliable and useful for such tasks.

So, while T5’s architecture is a natural fit for summarization, ChatGPT’s massive scale and, more importantly, its specialized instruction-following and RLHF training give it the powerful ability to perform this and many other structured tasks exceptionally well.

The Central Question: Can Vision Learn from NLP’s Playbook?

After establishing the success of pre-training on raw web text in NLP, the authors pivot to make their main point: computer vision has not yet embraced this paradigm, and perhaps it should.

These results suggest that the aggregate supervision accessible to modern pre-training methods within web-scale collections of text surpasses that of high-quality crowd-labeled NLP datasets.

This is a powerful claim. The authors are arguing that the sheer volume and diversity of text on the internet (the “aggregate supervision”) is a more potent teacher than smaller, meticulously human-labeled datasets. Think of it this way: a “crowd-labeled” dataset might have thousands of perfectly annotated examples for a specific task like question answering. But the internet has trillions of words discussing nearly every topic imaginable, from cooking to quantum mechanics to celebrity gossip. The authors’ claim is that the raw breadth and variety of this web-scale data provides a richer, more generalizable learning signal than any clean, but narrow, dataset ever could. Quantity and variety have a quality all their own.

Having made this point about NLP, they immediately contrast it with computer vision:

However, in other fields such as computer vision it is still standard practice to pre-train models on crowd-labeled datasets such as ImageNet (Deng et al., 2009).

This sentence sets up the central tension of the paper. While NLP has moved on to learning from the messy, vast internet, computer vision’s most foundational models are still pre-trained on datasets like ImageNet. ImageNet is a monumental achievement in data collection, consisting of over 14 million images hand-labeled by humans (via crowdsourcing platforms like Amazon Mechanical Turk) into thousands of object categories. It was the dataset that fueled the deep learning revolution.

However, from the perspective of the CLIP authors, it represents the “old” way of doing things: a finite set of categories, expensive to create, and fundamentally limited in scope compared to the near-infinite variety of visual information online.

This contrast leads them to state their research question in the clearest possible terms:

Could scalable pre-training methods which learn directly from web text result in a similar breakthrough in computer vision? Prior work is encouraging.

This is it. This is the thesis of the entire paper. The authors are proposing to directly apply the successful NLP blueprint to the field of computer vision. They are asking: what happens if we stop training vision models on fixed sets of categories and instead train them to connect images to the raw, natural language that accompanies them all over the internet?

The final sentence, “Prior work is encouraging,” is a deliberate and important piece of scientific storytelling. They are signaling that while their approach is ambitious, they are not the first to have this idea. They are building on a history of prior research, which they will now use to motivate their specific approach.

Standing on the Shoulders of Giants: A History of Vision-Language Models

The idea of teaching a computer vision system using natural language is not new. In this section, the authors take us on a two-decade tour of the research that paved the way for CLIP, showing a clear evolution from simple ideas to the sophisticated techniques that made their breakthrough possible.

The Early Pioneers

The journey starts over 20 years ago, demonstrating just how long researchers have been chasing this goal.

Over 20 years ago Mori et al. (1999) explored improving content based image retrieval by training a model to predict the nouns and adjectives in text documents paired with images.

This is the foundational concept in its simplest form. “Content-based image retrieval” is the task of finding similar images to a query image. Mori et al. realized that the text accompanying an image (like in an article) provides valuable clues. By training a model to associate parts of an image with specific words (nouns and adjectives), they could improve their system. This early work established the core principle: text paired with images is a powerful source of supervision.

The idea continued to evolve with increasing sophistication through the 2000s and early 2010s with work from Quattoni et al. (2007) and Srivastava & Salakhutdinov (2012), who explored more advanced techniques for learning “deep representations” from multimodal (i.e., multiple types of data, like text and images) features.

The Modern Era: CNNs Meet Text

The real acceleration began when modern deep learning architectures, specifically Convolutional Neural Networks (CNNs), were applied to the problem.

Joulin et al. (2016) modernized this line of work and demonstrated that CNNs trained to predict words in image captions learn useful image representations. They converted the title, description, and hashtag metadata of images in the YFCC100M dataset … into a bag-of-words multi-label classification task and showed that pre-training AlexNet … learned representations which preformed similarly to ImageNet-based pre-training on transfer tasks.

This was a major milestone. Joulin et al. took a large dataset of images from Flickr, each with associated text (titles, hashtags, etc.). They treated this as a massive classification problem using a bag-of-words (BoW) approach.

- Bag-of-Words (BoW): This is a simple way to represent text. Imagine you take a sentence, throw all the words into a bag, and shake it up. You ignore grammar and word order, and just count the occurrences of each word. The model’s task was to look at an image and predict the “bag of words” that appeared in its description.

The crucial finding was that an AlexNet model (the CNN that kicked off the deep learning boom in 2012) pre-trained this way learned visual features that were just as useful as those learned from the meticulously-labeled ImageNet dataset. This was strong evidence that learning from messy, real-world text could compete with learning from clean, human-labeled categories.

Li et al. (2017) then extended this approach to predicting phrase n-grams in addition to individual words and demonstrated the ability of their system to zero-shot transfer to other image classification datasets…

Li et al. took the next logical step. Instead of just predicting individual words (1-grams), they trained their model to predict n-grams (sequences of n words). For example, instead of predicting “golden” and “retriever” separately, the model could predict the 2-gram “golden retriever.” This captures more meaning. More importantly, they were one of the first to show that this approach could enable zero-shot transfer. They could create a classifier for new, unseen categories just by describing them with text. While the performance was low (as the paper later notes), it was a critical proof of concept.

The Immediate Predecessors

The final pieces of the puzzle came from very recent work that incorporated the latest NLP architectures and training techniques.

Adopting more recent architectures and pre-training approaches, VirTex (Desai & Johnson, 2020), ICMLM (Bulent Sariyildiz et al., 2020), and ConVIRT (Zhang et al., 2020) have recently demonstrated the potential of transformer-based language modeling, masked language modeling, and contrastive objectives to learn image representations from text.

These papers, published just before CLIP, brought the vision-language field right up to the cutting edge. They incorporated ideas from the NLP revolution we discussed earlier, like using powerful Transformer models to understand the text.

Most importantly, they explored contrastive objectives. This is a key concept for understanding CLIP.

- Contrastive Objectives: Instead of a predictive task (e.g., “predict the exact words in this caption”), a contrastive task is a matching task. The model is given an image, one correct text caption, and several incorrect captions. Its only job is to learn which text is the correct match. It learns to pull the representations of the correct (image, text) pair together in its embedding space, while pushing the representations of incorrect pairs far apart. This is often a much more efficient and robust learning signal than trying to predict every single word correctly.

These papers served as the final “proofs of concept,” showing that combining modern architectures with a contrastive learning objective was a promising path forward. The stage was now set for the CLIP authors to ask: what happens if we take this exact approach and scale it up… way up?

The Performance Gap and the “Pragmatic Middle Ground”

If learning from natural language is such a great idea, why wasn’t everyone already doing it? The authors directly address this by pointing to a simple, unavoidable fact: the performance just wasn’t good enough.

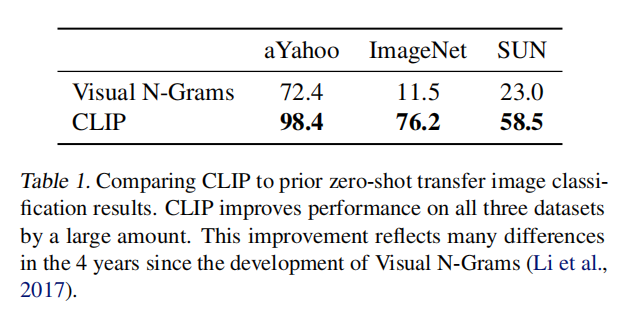

While exciting as proofs of concept, using natural language supervision for image representation learning is still rare. This is likely because demonstrated performance on common benchmarks is much lower than alternative approaches. For example, Li et al. (2017) reach only 11.5% accuracy on ImageNet in a zero-shot setting.

This is the sober reality check. The earlier work by Li et al. was a fantastic proof of concept for zero-shot transfer, but an 11.5% accuracy on ImageNet is, to put it bluntly, terrible. The authors drive this point home by providing two stark comparisons:

- It was far below the 88.4% accuracy of the state-of-the-art models at the time.

- It was even worse than the 50% accuracy of “classic” (pre-deep learning) computer vision methods from nearly a decade prior.

With such poor performance, it’s no wonder that this approach remained a niche research area rather than a mainstream technique. The promise of flexibility was overshadowed by the reality of poor results.

However, this didn’t stop researchers from using large-scale, internet-style data. Instead, they found a successful “middle ground” by using a more targeted, albeit less flexible, form of supervision.

Instead, more narrowly scoped but well-targeted uses of weak supervision have improved performance. Mahajan et al. (2018) showed that predicting ImageNet-related hashtags on Instagram images is an effective pre-training task.

This introduces a key concept: weak supervision. This term refers to using labels that are noisy, imprecise, or not perfectly curated, in contrast to the “gold-standard” clean labels of a dataset like ImageNet. The key insight from Mahajan et al. was to leverage this at a massive scale.

- What they did: They trained a model on billions of public Instagram images. The “label” for each image was simply the set of hashtags its user had applied.

- Why it’s “weak”: Hashtags are very noisy. An image of a cat at the beach might be tagged with

#cat,#beach,#sunset, and#vacation. - Why it’s “well-targeted”: Crucially, they filtered the hashtags to only include those relevant to the 1000 ImageNet classes.

- The Result: This was an incredibly effective pre-training strategy. A model pre-trained on these noisy hashtags and then fine-tuned on ImageNet achieved a new state-of-the-art accuracy, boosting performance by over 5%.

This was followed by similar work that further validated the approach:

Kolesnikov et al. (2019) and Dosovitskiy et al. (2020) have also demonstrated large gains on a broader set of transfer benchmarks by pre-training models to predict the classes of the noisily labeled JFT-300M dataset.

This work used JFT-300M, a massive internal Google dataset with 300 million images and noisy, automatically generated labels for thousands of classes. Just like the Instagram work, pre-training on this huge, “weakly” labeled dataset before fine-tuning on smaller, clean datasets led to huge performance gains.

So, the authors have established a clear story:

- The dream of learning from general, arbitrary text (like captions) was exciting but performed poorly.

- The pragmatic approach of learning from massive but targeted weak labels (like hashtags or noisy class labels) was a huge success for pre-training.

This sets the stage for the authors to critique this successful “middle ground” and introduce their own approach as the true solution.

The Problem with the Middle Ground: A Critique of Weak Supervision

The successful pre-training methods from Instagram and Google (JFT-300M) represented a huge step forward. The authors acknowledge this, calling it the “pragmatic middle ground.”

This line of work represents the current pragmatic middle ground between learning from a limited amount of supervised “gold-labels” and learning from practically unlimited amounts of raw text.

Think of it as a spectrum of supervision:

- One Extreme: A small set of high-quality, human-verified “gold labels” (e.g., ImageNet).

- The Other Extreme: A nearly infinite amount of messy, unstructured, raw text paired with images (the authors’ ultimate goal).

- The Middle Ground: Massive datasets of “weakly” labeled images (e.g., Instagram hashtags). This approach successfully captured the scale of the internet data but constrained the supervision to be more like traditional labels.

However, the authors argue that this pragmatic solution comes with a major compromise that limits its ultimate potential.

However, it is not without compromises. Both works carefully design, and in the process limit, their supervision to 1000 and 18291 classes respectively. Natural language is able to express, and therefore supervise, a much wider set of visual concepts through its generality.

This is the core of the critique. Even though these models were trained on billions of images, the supervision was still a fixed list of categories. It might be a much bigger list than ImageNet’s 1000 classes, but it is a list nonetheless. This fundamentally restricts what the model can learn. If a concept isn’t in your list of 18,291 target labels, the model has no way to learn it.

Natural language, by contrast, is not a list. It is a general-purpose system for describing the world. It can express an almost infinite variety of visual concepts: objects (“dog”), actions (“a dog jumping”), attributes (“a fluffy brown dog”), relationships (“a dog chasing a cat”), and abstract ideas (“a lonely dog”). This is the expressiveness and generality the authors want to capture.

The second, more technical limitation is baked into the architecture of these “middle ground” models.

Both approaches also use static softmax classifiers to perform prediction and lack a mechanism for dynamic outputs. This severely curtails their flexibility and limits their “zero-shot” capabilities.

This is a crucial technical point. Let’s break it down:

- Static Softmax Classifier: As we discussed in our earlier note, this refers to the final layer of a traditional classification model. It has a fixed number of outputs, one for each class in the predefined list. The softmax function then converts these outputs into a probability distribution over that fixed list.

- Lack of Dynamic Outputs: Because the classifier’s structure is fixed, it cannot produce outputs for new, unseen classes. It is architecturally locked into its original set of categories.

This architectural choice makes true, flexible zero-shot transfer impossible. You cannot simply give the model a new text description at test time and have it classify an image, because there is no output neuron corresponding to that new concept. The model’s knowledge is trapped behind this rigid, static classifier.

In essence, the authors are arguing that while the weak supervision approach was a powerful trick for boosting performance on existing benchmarks, it was an evolutionary dead end. To achieve their goal of a truly flexible, general-purpose vision model, they needed to abandon the fixed-classifier paradigm entirely, a problem that would require a different approach to both the data and the model.

Closing the Gap with Scale: Introducing CLIP

The authors now identify the final, crucial difference between the successful “weak supervision” models and the less successful attempts at learning from general natural language: scale.

A crucial difference between these weakly supervised models and recent explorations of learning image representations directly from natural language is scale. While Mahajan et al. (2018) and Kolesnikov et al. (2019) trained their models for accelerator years on millions to billions of images, VirTex, ICMLM, and ConVIRT trained for accelerator days on one to two hundred thousand images.

This is a critical insight. The successful models weren’t just successful because they used targeted hashtags; they were successful because they were trained on an absolutely colossal scale.

- Weak Supervision Models (Instagram, JFT-300M): Trained on billions of images, requiring accelerator-years of compute. An “accelerator-year” is the equivalent of running a single high-end GPU or TPU for a full year, 24/7.

- Natural Language Supervision Models (VirTex, etc.): Trained on only hundreds of thousands of images, requiring only accelerator-days of compute.

The authors are hypothesizing that the previous attempts at learning from general language failed not because the idea was wrong, but because they were orders of magnitude too small. The rich, noisy, and complex signal of natural language might require a massive amount of data to work effectively.

This leads directly to their own contribution.

In this work, we close this gap and study the behaviors of image classifiers trained with natural language supervision at large scale. Enabled by the large amounts of publicly available data of this form on the internet, we create a new dataset of 400 million (image, text) pairs and demonstrate that a simplified version of ConVIRT trained from scratch, which we call CLIP, for Contrastive Language-Image Pre-training, is an efficient method of learning from natural language supervision.

Here, they lay out the core components of their work:

- Close the Scale Gap: They are the first to attempt training with general natural language supervision at the same massive scale as the successful weak supervision methods.

- A New Dataset: To do this, they had to build their own dataset from scratch, collecting 400 million image-text pairs from the internet. This is a monumental engineering effort and a key contribution in itself.

- The Method (CLIP): They name their model CLIP, which stands for Contrastive Language-Image Pre-training. They explicitly state it’s a simplified version of the ConVIRT model (one of the immediate predecessors they mentioned), which uses the efficient and robust contrastive learning objective we discussed earlier.

Finally, they summarize the key findings they will present in the rest of the paper.

We study the scalability of CLIP by training a series of eight models… and observe that transfer performance is a smoothly predictable function of compute… We find that CLIP, similar to the GPT family, learns to perform a wide set of tasks during pre-training including OCR, geo-localization, action recognition, and many others. We measure this by benchmarking the zero-shot transfer performance of CLIP on over 30 existing datasets…

This is the roadmap for the rest of the paper. They will show that:

- CLIP’s performance scales predictably with model size and compute, just like the GPT models did for NLP. This is a strong sign that the approach is robust and has not yet hit its limits.

- During pre-training, CLIP learns a surprising variety of skills beyond simple object recognition.

- They will prove its capabilities by testing its zero-shot transfer performance on a very broad and diverse set of over 30 benchmarks.

This paragraph perfectly concludes the setup and transitions us into the main body of the paper, where the authors will provide the evidence to back up these claims.

2. Approach

2.1 Natural Language Supervision

At the heart of CLIP is a single, powerful idea that serves as the foundation for all the technical details that follow.

At the core of our approach is the idea of learning perception from supervision contained in natural language.

This is their guiding principle. Instead of learning from predefined class labels like class_id: 7 (which a file might later map to “car”), the model learns directly from the raw text that humans use to describe the world: “a photo of a blue car parked on the street.”

A Tangle of Terminology

The authors first take a moment to clear up some confusion. The field of machine learning has many different terms for training without clean, human-provided labels, and the lines can get blurry.

…terminology used to describe work in this space is varied, even seemingly contradictory… [approaches are described] as unsupervised, self-supervised, weakly supervised, and supervised respectively.

Imagine one paper scrapes image-caption pairs and calls the method “supervised” because the captions are technically labels. Another paper might do the same but call it “weakly supervised” because the captions are noisy. A third might call it “self-supervised” because the labels come from the data itself.

The authors of CLIP argue that these distinctions miss the point. The important, common thread is not the specific implementation, but the source of the training signal. To unify this, they propose their own term: Natural Language Supervision.

We emphasize that what is common across this line of work is not any of the details of the particular methods used but the appreciation of natural language as a training signal. All these approaches are learning from natural language supervision.

This is a key contribution in itself. They are giving a name to the paradigm they are championing, framing it as the defining characteristic of this entire line of research.

Why Natural Language Supervision?

The authors argue that this approach has two game-changing advantages over other methods, from traditional supervised learning to even other forms of self-supervision.

1. It’s Immensely Scalable.

Creating a high-quality, labeled dataset like ImageNet is a monumental undertaking. It requires thousands of hours of human labor to manually classify millions of images, often through a voting process to create a single, canonical “gold label” for each image. This process is slow, expensive, and doesn’t scale easily.

It’s much easier to scale natural language supervision compared to standard crowd-sourced labeling for image classification since it does not require annotations to be in a classic “machine learning compatible format”… Instead, methods which work on natural language can learn passively from the supervision contained in the vast amount of text on the internet.

In contrast, the internet is already filled with billions of images that are naturally paired with text. Scraping this data is an engineering challenge, but it’s a process that can be automated and scaled far more easily than manual labeling.

2. It Enables Flexible Zero-Shot Transfer.

This is the most critical advantage and what truly sets CLIP’s approach apart. Many self-supervised methods are excellent at learning powerful image features. For example, a model might learn to recognize that two different, augmented views of the same cat image should have a similar feature representation.

The problem? That representation is just a vector of numbers. It has no inherent connection to human concepts. After pre-training, you still need a labeled dataset to train a classifier that learns to map those feature vectors to class labels like “cat” or “dog.”

Learning from natural language also has an important advantage over most unsupervised or self-supervised learning approaches in that it doesn’t “just” learn a representation but also connects that representation to language which enables flexible zero-shot transfer.

Natural Language Supervision solves this problem by design. From the very beginning, the model is forced to build a bridge between the visual world and the world of human language. It learns to create image representations that are inherently aligned with the text representations of what those images contain. This built-in connection to language is the key that unlocks CLIP’s remarkable ability to perform classification on tasks and categories it has never seen before.

2.2 Creating a Sufficiently Large Dataset

A model is only as good as the data it’s trained on. For a task as ambitious as learning general visual concepts from language, the CLIP authors needed an engine fueled by an unprecedented amount of diverse, high-quality data. In this section, they explain why existing datasets were not up to the task.

Why Existing Datasets Weren’t Enough

The authors begin by evaluating the common datasets used for this kind of vision-language research. They quickly find them lacking in one of two key areas: scale or quality.

Existing work has mainly used three datasets, MS-COCO (Lin et al., 2014), Visual Genome (Krishna et al., 2017), and YFCC100M (Thomee et al., 2016).

Let’s break down the limitations of each:

MS-COCO and Visual Genome: These are the gold standard for quality. They contain images with detailed, human-written captions describing the scene. The problem? They are tiny by modern standards, with only about 100,000 training images each. This is simply not enough data to learn the rich, generalizable representations the authors are aiming for, especially when compared to the billion-image datasets used in the “weak supervision” work.

YFCC100M: This dataset, a collection of 100 million photos from Flickr, seems to solve the scale problem. However, it fails on quality. The “text” associated with these images is often not useful natural language.

Many images use automatically generated filenames like

20160716_113957.JPGas “titles” or contain “descriptions” of camera exposure settings. After filtering to keep only images with natural language titles and/or descriptions in English, the dataset shrunk by a factor of 6 to only 15 million photos. This is approximately the same size as ImageNet.

This is the killer finding. The one public dataset that seemed large enough for the task ended up being no better than ImageNet after basic quality filtering. This neatly demonstrates the core problem: no publicly available dataset had both the massive scale and the natural language supervision required to truly test their hypothesis.

Building a New Dataset: WebImageText (WIT)

Faced with this data gap, the authors made a critical decision: they would build their own.

To address this, we constructed a new dataset of 400 million (image, text) pairs collected form a variety of publicly available sources on the Internet.

This is a monumental contribution. They created a new dataset, which they later call WebImageText (WIT), that is more than 25 times larger than the filtered YFCC100M dataset.

Crucially, they didn’t just scrape images randomly. They designed a systematic process to ensure the dataset covered a vast and diverse range of visual concepts.

To attempt to cover as broad a set of visual concepts as possible, we search for (image, text) pairs as part of the construction process whose text includes one of a set of 500,000 queries.

How did they create this list of 500,000 queries? The footnote reveals a clever and highly effective strategy:

- They started with every word that appears at least 100 times in the English Wikipedia. This provides a massive base of common and uncommon nouns, verbs, adjectives, and proper nouns.

- They augmented this list with bi-grams (two-word phrases) that are statistically significant (i.e., they appear together more often than by chance, like “San Francisco”).

- They also included the titles of all Wikipedia articles above a certain popularity threshold.

This systematic approach ensures their dataset isn’t just large but also incredibly broad, providing the rich and varied supervision needed to learn a truly general model of the visual world. Building this dataset was a foundational step that made the rest of CLIP’s success possible.

2.3 Selecting an Efficient Pre-Training Method

Having established the need for a massive dataset, the authors faced their next great challenge: how do you actually train a model on 400 million images without it taking decades? Training efficiency, they realized, was not just a convenience—it was the key to making their entire approach feasible.

State-of-the-art computer vision systems use very large amounts of compute. Mahajan et al. (2018) required 19 GPU-years to train their ResNeXt101… When considering that both these systems were trained to predict only 1000 ImageNet classes, the task of learning an open set of visual concepts from natural language seems daunting.

The authors start by framing the problem. Previous state-of-the-art models required enormous amounts of computation (e.g., 19 GPU-years) just to learn 1000 fixed categories. Their goal of learning a nearly unlimited set of concepts from noisy, complex natural language was far more ambitious. A slow, inefficient training method would be a non-starter. This led them to a crucial bake-off between three different approaches.

Attempt 1: Predictive Language Modeling (Too Slow)

Their first attempt was the most direct and, in some ways, the most intuitive. It was similar to the approach used by VirTex, one of the recent papers they cited.

Our initial approach, similar to VirTex, jointly trained an image CNN and text transformer from scratch to predict the caption of an image. However, we encountered difficulties efficiently scaling this method.

In this setup, the model would look at an image and then, using a Transformer-based language model, try to generate the exact caption associated with it, word by word.

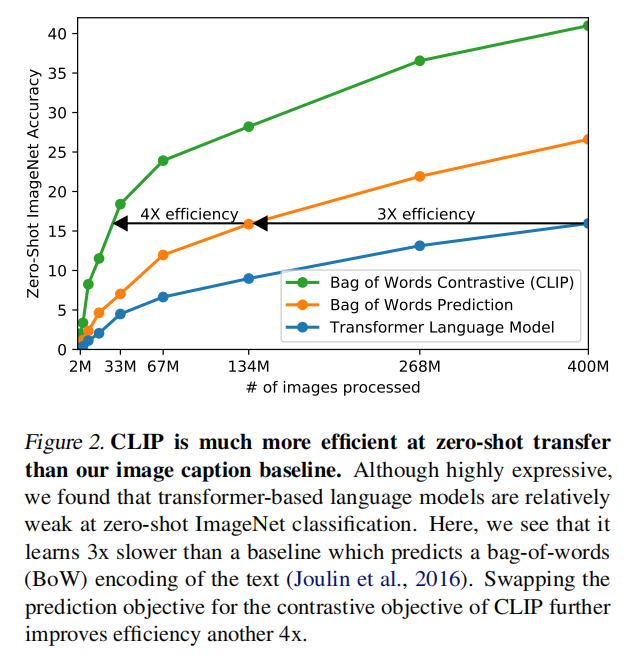

The problem? This is an incredibly difficult and unforgiving task. The text paired with an image on the internet can vary wildly. A photo of a cat might be paired with “a picture of my cat, Fluffy,” or “a cat sitting on a sofa,” or “here’s a cute animal #catsofinstagram.” Forcing the model to predict the exact sequence of words is a very high bar and, as they found, a very slow way to learn. As shown in their Figure 2, this approach was three times slower at learning to recognize ImageNet classes than a simpler baseline.

Attempt 2: Bag-of-Words Prediction (Better, But Not Enough)

The simpler baseline they compared against was the bag-of-words (BoW) approach, similar to the one used by Joulin et al. (2016). Instead of predicting the exact sentence, the model’s task was simply to predict the set of words present in the caption, ignoring grammar and word order. This is an easier task and, as expected, it was more efficient than full language modeling. However, it still wasn’t the breakthrough in efficiency they needed.

The Winner: Contrastive Learning (4x More Efficient)

This led them to their third and final approach, which solved the efficiency puzzle by reframing the problem entirely.

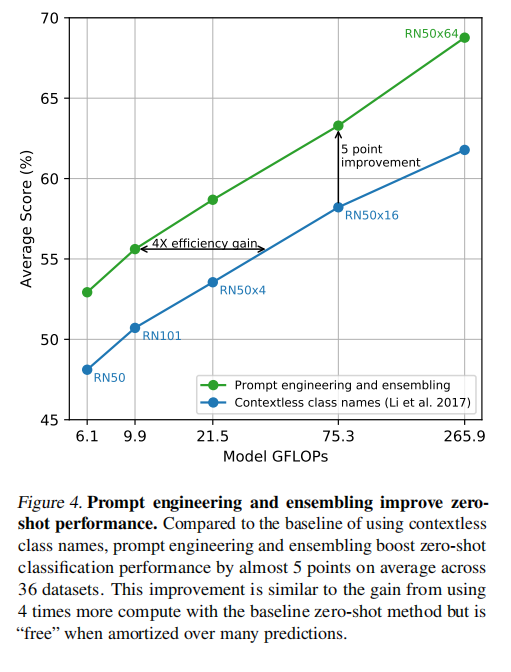

…we explored training a system to solve the potentially easier proxy task of predicting only which text as a whole is paired with which image and not the exact words of that text. … Starting with the same bag-of-words encoding baseline, we swapped the predictive objective for a contrastive objective in Figure 2 and observed a further 4x efficiency improvement…

This is the core of CLIP’s training method. Instead of predicting the caption (a generative task), they turned it into a matching task (a contrastive task). Here’s how it works:

- Take a batch of

Nimages and theirNcorresponding text captions. - This creates

Ncorrect pairs. All other combinations (N*N - Nof them) are incorrect. - The model’s goal is to learn a representation for images and text such that the similarity score for the correct pairs is high, and the similarity score for all incorrect pairs is low.

This is a much more efficient learning signal. The model doesn’t get punished for minor wording differences in a caption; it just has to learn that “a photo of a dog” is a better match for a dog image than “a photo of a cat” is. This simple change provided a massive 4x efficiency boost over the already-better BoW method. Combined with the 3x improvement over the language modeling approach, the contrastive objective was up to 12 times more efficient, making it the clear winner and the right choice for training at scale.

The CLIP Objective: A Simplified and Scalable Contrastive Loss

The paper then provides the technical details of their contrastive approach.

Given a batch of N (image, text) pairs, CLIP is trained to predict which of the N × N possible (image, text) pairings across a batch actually occurred. To do this, CLIP learns a multi-modal embedding space by jointly training an image encoder and text encoder to maximize the cosine similarity of the image and text embeddings of the N real pairs in the batch while minimizing the cosine similarity of the embeddings of the N^2 − N incorrect pairings.

This method, known formally as the InfoNCE loss, was becoming popular in self-supervised learning, and the authors adapted it for their vision-language task. They also made several key simplifications compared to other contemporary methods, demonstrating the robustness of the core idea:

- They trained the model from scratch, without using pre-trained ImageNet weights for the image encoder or a pre-trained language model for the text encoder.

- They used a simple linear projection to map the encoder outputs into the multi-modal embedding space, forgoing the more complex non-linear projection heads used by models like SimCLR.

- They used minimal data augmentation: just a single random square crop from the resized images.

- They even optimized the temperature parameter of the softmax function, a small but important hyperparameter, directly during training.

These simplifications show that the power of CLIP doesn’t come from lots of complex tricks or architectural bells and whistles. It comes from a simple, highly efficient contrastive objective applied to a massive and diverse dataset.

In the paper, the authors state they used a “simple linear projection” instead of the “more complex non-linear projection heads” used by other models like SimCLR. This might sound like technical jargon, but it’s a key architectural choice that’s worth understanding.

At its core, the issue is about how you get from the raw output of an encoder to the final space where you perform the contrastive learning (the matching game).

The Standard Approach at the Time: The Non-Linear Projection Head

In many self-supervised and contrastive learning frameworks, particularly SimCLR (a very influential paper on contrastive learning for images), the process looked like this:

- Encoder: An image goes into a powerful encoder (e.g., a ResNet) which produces a feature vector, let’s call it

h. This vectorhis the rich, general-purpose representation of the image that you want to use for downstream tasks later on. - Projection Head: The feature vector

his not used directly for the contrastive loss. Instead, it’s passed through a small neural network, typically a Multi-Layer Perceptron (MLP), called the “projection head.” This MLP transformshinto a new vector,z. - Contrastive Loss: The contrastive learning (the matching game of pulling similar things together and pushing different things apart) is performed on these final

zvectors. - Discard after Training: Crucially, after pre-training is finished, the projection head is thrown away. For any downstream task (like classification), you use the original feature vectors,

h, that came directly from the encoder.

Why do this? The theory behind SimCLR’s approach was that this separation was beneficial. The projection head’s job was to transform the features into a space where it’s easy to perform the contrastive task. This process might involve throwing away some information that’s not useful for the matching game (e.g., precise color information might be discarded if the task is just to match two augmented views of the same object).

By adding this extra step, you allow the main encoder’s representation h to remain as rich and general as possible, retaining all that potentially useful information, while the disposable projection head does the “dirty work” of preparing the features for the contrastive loss.

CLIP’s Simpler Approach: The Linear Projection

The CLIP authors decided to simplify this entire process.

- Encoder: An image (or text) goes into its respective encoder, producing a feature vector.

- Linear Projection: Instead of a multi-layer MLP, this feature vector is passed through a single, simple linear layer (essentially, just one matrix multiplication). This transforms the vector into the final representation used in the contrastive space.

What does this mean? A linear projection is much less powerful than a non-linear MLP. It can only perform basic transformations like rotating and scaling the feature space; it can’t learn complex, non-linear relationships.

By making this choice, the CLIP authors are making an implicit statement: the representations coming directly out of our encoders are already good enough. They don’t need a powerful, complex transformation to be prepared for the contrastive learning task. The raw features can be mapped directly into the shared multi-modal embedding space with a simple, learned linear map.

Why did they make this choice?

- Simplicity and Efficiency: It’s a simpler architecture with fewer parameters.

- It Just Worked: As the authors state, “We did not notice a difference in training efficiency between the two versions.” This suggests that with their massive dataset and the strong signal from the language-image objective, the extra complexity of the non-linear head was simply unnecessary.

- A Different Problem: They speculate that the non-linear head might be specifically beneficial for image-only self-supervised learning, where the task is to identify two augmented views of the same image. In CLIP’s case, the task is to match a photo with a sentence — a fundamentally different and perhaps clearer signal that doesn’t require the extra transformation.

In the paper, the authors mention that the “temperature parameter… is directly optimized during training.” This might seem like a minor detail, but it’s a clever and pragmatic solution to a notoriously difficult problem in contrastive learning. To understand why, let’s think of this parameter as the model’s “confidence knob.”

1. What is Softmax Temperature?

First, a quick refresher. In classification, a model often outputs raw scores, called logits. The softmax function then converts these logits into a clean probability distribution (a set of numbers that add up to 1).

The temperature (let’s call it T) is a parameter that controls the shape of this probability distribution. It works by dividing the logits before they are fed into the softmax function:

probabilities = softmax(logits / T)

The effect of T is as follows:

- High Temperature (e.g., T > 1): This makes the probabilities “softer” or more uniform. The model becomes less confident. A high

Tshrinks the logits, making the differences between them smaller. For example, logits of[10, 0, -10]might become probabilities of[0.6, 0.2, 0.2]. - Low Temperature (e.g., T < 1): This makes the probabilities “sharper” or more “peaked.” The model becomes more confident. A low

Texaggerates the differences between the logits. The same logits of[10, 0, -10]might become probabilities of[0.99, 0.01, 0.0].

2. The Goldilocks Problem in Contrastive Learning

In CLIP’s contrastive setup, the “logits” are the similarity scores between an image and all the text captions in a batch. The model’s goal is to assign a very high probability to the one correct match. The temperature here is crucial for controlling the learning process:

- If

Tis too high (too soft): The model will be unconfident. The probability of the correct pair will only be slightly higher than the incorrect pairs. This creates a very weak learning signal (a small gradient), and the model will learn very slowly or not at all. - If

Tis too low (too sharp): The model will be overconfident. It might quickly learn to separate the “easy” negative examples (e.g., a dog image vs. the caption “a photo of a car”) but fail to learn from the “hard” negative examples (e.g., a dog image vs. the caption “a photo of a wolf”). This can lead to poor training and a worse final model.

You need a temperature that is “just right”—a value that properly balances the penalties for incorrect pairings and creates a stable, effective learning signal.

3. The Old Way: Expensive and Painful Hyperparameter Tuning

Traditionally, the temperature T is a hyperparameter. This means it’s a value that the data scientist has to choose and set before training begins. How do you find the best value?

The standard method is a brute-force approach like a grid search. You would run a series of small-scale experiments, trying a fixed T of 0.01, then 0.05, then 0.07, then 0.1, and so on. You’d then pick the value that worked best and use it for your final, large-scale training run.

For a model like CLIP, which is trained on 400 million images and takes weeks on hundreds of GPUs, this process is prohibitively expensive. Running multiple experiments just to tune one knob is not a feasible option.

4. CLIP’s Elegant Solution: Let the Model Learn the Knob’s Setting

Instead of manually guessing the best temperature, the CLIP authors did something much smarter: they made the temperature a learnable parameter.

Just like the millions of other weights in the neural network, the temperature T was initialized at some value and then updated at every step of training via backpropagation and gradient descent. The model itself was tasked with figuring out the optimal “confidence level” that best helped it minimize the overall loss. If the model was too unconfident, the loss function would effectively “tell” it to lower the temperature. If it was too overconfident, it would tell it to raise it.

This is more than just a minor tweak; it’s a perfect example of CLIP’s design philosophy:

- It automates a difficult process: It removes the need for the human researcher to perform an expensive and time-consuming hyperparameter search.

- It’s more efficient: It saves a massive amount of computation that would have been wasted on tuning experiments.

- It’s potentially more robust: The model can find a more optimal value for

Tthan a human might through a coarse grid search.

By making the temperature learnable, the authors simplified their training pipeline and made their entire large-scale experiment more practical and likely to succeed.

2.4 Choosing and Scaling a Model

With the dataset and training objective settled, the final piece of the puzzle was the model architecture itself. What kind of neural networks should be used for the image and text encoders, and more importantly, how can they be scaled up effectively to handle the massive dataset?

The Image Encoder: The Workhorse vs. The New Challenger

The authors evaluated two different families of architectures for the image encoder, representing both the established state-of-the-art and a new, promising alternative.

1. The Workhorse: A Modernized ResNet

The first choice was a ResNet-50. The Residual Network (ResNet) architecture is one of the most influential and widely used in computer vision history. It’s a known, reliable “workhorse.” However, the authors didn’t just use the original 2016 version. They made several key modernizations to boost its performance:

- They incorporated ResNet-D improvements, a series of small but effective tweaks to the internal structure of the ResNet blocks.

- They added antialiased blur pooling, a technique that helps the model be less sensitive to small shifts or translations in an image, improving its overall robustness.

- Most interestingly, they replaced the standard “global average pooling” layer with an attention pooling mechanism. Instead of just taking a simple average of all the features at the end of the network, this new layer uses a Transformer-style multi-head attention mechanism to learn a weighted average. In essence, it learns to pay more attention to the most important parts of the image when creating its final summary representation.

2. The New Challenger: The Vision Transformer (ViT) The second architecture they tested was the Vision Transformer (ViT), which was a very new and exciting development at the time. The ViT radically rethinks image processing by applying the Transformer architecture, which was originally designed for text, directly to images. It works by:

- Breaking an image down into a grid of small, fixed-size patches (e.g., 16x16 pixels).

- Treating this sequence of patches as if it were a sequence of words in a sentence.

- Feeding this sequence into a standard Transformer encoder to learn the relationships between the different parts of the image.

The authors followed the original ViT implementation closely, making only a minor modification. By testing both ResNets and ViTs, they were able to compare a mature, highly-optimized CNN against a powerful new paradigm.

The Text Encoder: A Standard Transformer

The choice of text encoder was more straightforward. They used a standard Transformer architecture, very similar to the one used in models like GPT-2. The key details are:

- Architecture: A 12-layer, 512-unit-wide Transformer with 8 attention heads.

- Tokenization: The text is processed using a byte-pair encoding (BPE) tokenizer with a vocabulary of about 49,000 “tokens.” BPE is a clever way to handle language: instead of just splitting words, it breaks words down into common sub-word units. This allows it to represent any word, even ones it has never seen before, without having an enormous vocabulary.

- Processing: For any given text input, the sequence of tokens is capped at 76, bracketed with

[SOS](start of sentence) and[EOS](end of sentence) tokens. The final representation of the[EOS]token is taken as the feature representation for the entire text snippet, as this token’s final state is influenced by all the words that came before it.

The Scaling Strategy: Growing Smarter, Not Just Bigger

How do you make a model more powerful? The naive approach is to just make it deeper (add more layers) or wider (add more units per layer). However, work from the EfficientNet paper (Tan & Le, 2019) showed that the best strategy is compound scaling: simultaneously increasing the model’s depth, width, and the resolution of the input images in a balanced way.

The CLIP authors adapted this sophisticated strategy for their ResNet models. Instead of painstakingly tuning the exact ratio for each dimension, they used a simple rule of thumb: they allocated additional compute equally to increasing the width, depth, and resolution. This allowed them to create a series of progressively larger and more powerful ResNet models in a principled way.

Interestingly, for the text encoder, they found that performance was much less sensitive to its size. As a result, when scaling up their models, they only scaled the width of the text Transformer, keeping its depth (number of layers) constant. This is a great example of the empirical, results-driven engineering required to build such a massive system.

When the CLIP authors chose ResNet as one of their image encoders, they didn’t use the original 2016 version off the shelf. Instead, they incorporated a set of important upgrades from a 2018 paper titled “Bag of Tricks for Image Classification with Convolutional Neural Networks.” One of the key improvements from that paper is a modified architecture known as ResNet-D.

To understand ResNet-D, we first need to understand a subtle flaw in the original ResNet design.

The Flaw in the Original ResNet

A standard ResNet is built from “residual blocks.” These blocks have two paths for information to flow:

- The Main Path: The input goes through a series of convolutional layers.

- The Skip Connection (or Shortcut): The original input “skips” over these layers and is added back in at the end.

In some of these blocks, the convolutional path needs to downsample the image (i.e., reduce its spatial resolution, like from 56x56 to 28x28). The original ResNet did this using a stride of 2 in the very first 1x1 convolution of the main path.

The problem? A 1x1 convolution with a stride of 2 effectively throws away 3/4 of the information in the feature map. It only looks at every other pixel, discarding the rest. While this works, it’s an aggressive and inefficient way to downsample, causing a significant loss of information early in the block.

The ResNet-D Improvement

ResNet-D fixes this by making a simple but clever change to the downsampling blocks:

- It moves the stride of 2 from the initial 1x1 convolution to the 3x3 convolution later in the path.

This small change has a big impact. Now, the 3x3 convolution sees all of the input features (it has a stride of 1), and it performs the downsampling itself. Because a 3x3 convolution looks at a larger area (a 3x3 patch of pixels), it can learn a much more effective and information-preserving way to downsample the feature map, rather than just naively discarding 75% of the data.

This is a perfect example of a “trick” that costs almost nothing in terms of computation but leads to a noticeable improvement in model accuracy by preserving more information as it flows through the network. By incorporating ResNet-D, the CLIP authors ensured their CNN baseline was as strong and modern as possible.

Another key modernization the authors added to their ResNet was antialiased blur pooling. This technique addresses a fundamental problem with how traditional Convolutional Neural Networks (CNNs) handle small shifts in an image.

The Problem: CNNs are Surprisingly Brittle to Shifts

We often think of CNNs as being “translation invariant,” meaning that if you shift an object slightly in an image, the model’s prediction shouldn’t change. In practice, this isn’t entirely true. A standard CNN can be surprisingly sensitive to small, one-pixel shifts.

The culprit is often the max pooling layer (or a convolution with a stride greater than 1), which is used to downsample the feature maps. Imagine a max pooling layer that looks at a 2x2 grid of pixels and outputs the maximum value. If a key feature is right on the edge of that 2x2 grid, a tiny shift in the input image can cause it to fall into a different grid, leading to a completely different output. This can make the network’s internal representations unstable and brittle.

This violates a core principle of signal processing known as Nyquist’s sampling theorem. In simple terms, if you sample a signal (like an image) too aggressively without smoothing it first, you can get aliasing artifacts—unwanted patterns that distort the true signal. This is exactly what a standard max pooling or strided convolution does.

The Solution: Blur First, Then Sample

Antialiased blur pooling (from the 2019 paper “Making Convolutional Networks Shift-Invariant Again”) solves this problem with an incredibly simple and elegant idea inspired by classic signal processing:

- Blur: Before downsampling, apply a small, fixed blurring filter (like a 3x3 triangular filter) to the feature map. This has the effect of smoothing it out.

- Downsample: Now, perform the standard downsampling operation (like max pooling or a strided convolution) on this new, blurred feature map.

By blurring first, you are effectively “spreading out” the features. This makes the downsampling operation much more stable. A small shift in the input will no longer cause a drastic change in the output, because the blurred feature has influence over a slightly larger area.

2.5. Training

With the architecture and scaling strategy defined, the authors now turn to the specifics of the training process. This section highlights the different model configurations they trained and the immense computational resources required.

The Model Zoo: A Fleet of ResNets and ViTs

The authors didn’t just train one final CLIP model. To study how performance scales with compute, they trained a whole family of models of varying sizes. This is crucial for their scientific claim that the benefits of their approach are a predictable function of scale.

They trained a total of eight primary models:

- Five ResNet-based models:

- A standard ResNet-50 and ResNet-101.

- Three much larger, custom ResNets built using their EfficientNet-style compound scaling strategy. These are denoted RN50x4, RN50x16, and RN50x64, representing models that use approximately 4x, 16x, and 64x the compute of the base ResNet-50, respectively.

- Three Vision Transformer (ViT) based models:

- ViT-B/32 (the “Base” ViT model using 32x32 pixel patches).

- ViT-B/16 (the “Base” ViT model using smaller 16x16 patches, which is more powerful).

- ViT-L/14 (the “Large” ViT model using 14x14 patches).

All models were trained for a fixed 32 epochs, meaning they saw the entire 400 million image dataset 32 times.

The Training Recipe: Optimization and Hyperparameters

The training setup used a standard but highly optimized recipe for large-scale deep learning:

- Optimizer: They used the Adam optimizer, a very popular and effective choice, with a specific modification called “decoupled weight decay regularization,” which can improve generalization.

- Learning Rate Schedule: The learning rate, which controls how big of a step the optimizer takes at each iteration, was decayed over the course of training using a cosine schedule. This means the learning rate starts high, gradually and smoothly decreases in a cosine curve, and ends near zero. This is a very common and robust schedule for training large models.

- Hyperparameter Tuning: Interestingly, they note that the initial hyperparameters were found by experimenting on the baseline ResNet-50 model. For the larger, more expensive models, these settings were then “adapted heuristically” due to the massive computational cost of doing a full hyperparameter search for each one. This is a pragmatic admission of the real-world constraints of training at this scale.

- Batch Size: They used an absolutely enormous minibatch size of 32,768. This is one of the keys to training large models efficiently on parallel hardware like GPUs and TPUs. A large batch size ensures that the hardware is fully utilized and that the estimate of the gradient at each step is very stable.

The Engineering of Scale: Making it all Fit

Training a model of this magnitude on a dataset this large requires a suite of advanced engineering techniques to manage memory and speed up computation. The authors used several key methods:

- Mixed-Precision Training: Instead of representing all numbers in the network with 32-bit floating point precision (FP32), this technique uses a mix of lower-precision 16-bit floats (FP16) and FP32. FP16 requires half the memory and is often much faster on modern GPUs. This is a standard and essential trick for large-scale training.

- Gradient Checkpointing: A memory-saving technique where, instead of storing all the intermediate activations needed for backpropagation, the model re-computes them on the fly. This trades extra compute for a significant reduction in memory usage, allowing larger models to be trained.

- Sharded Computation: They note that the calculation of the N x N similarity matrix was “sharded,” meaning it was split up across the individual GPUs. Each GPU only had to compute the similarities between its local batch of images and all the text embeddings, rather than having one GPU compute the entire massive matrix.

Finally, the authors provide a sense of the staggering compute involved:

- The largest ResNet model (RN50x64) took 18 days to train on 592 V100 GPUs.

- The largest Vision Transformer model (ViT-L/14) took 12 days on 256 V100 GPUs.

This section makes it clear that CLIP is not just a scientific breakthrough, but also a monumental feat of engineering.

3. Experiments

3.1 Zero-Shot Transfer

The entire premise of CLIP is that its novel pre-training strategy enables flexible transfer to new tasks. In this section, the authors put that claim to the test. But before they show us the results, they take a moment to define exactly what they mean by “zero-shot transfer” and why their definition represents a more ambitious and meaningful measure of a model’s capabilities.

3.1.1 Motivation: Redefining “Zero-Shot” as True Task Learning

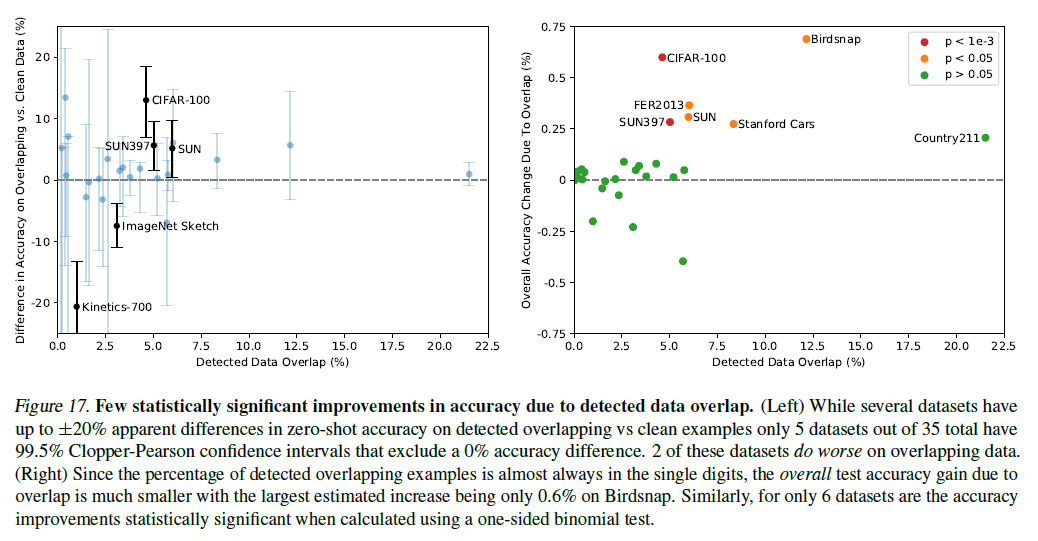

The term “zero-shot learning” can mean different things to different people. The authors begin by clarifying the distinction between the traditional, narrow definition and their own broader, more challenging one.