Deploy Scikit-learn Models to Amazon SageMaker with the SageMaker Python SDK using Script mode

Introduction

You may have trained a model with your favorite ML framework, and now you are asked to move your code to Amazon SageMaker. The good news is that SageMaker’s fully managed training works well with many popular ML frameworks, including scikit-learn. In addition, SageMaker provides a prebuilt container for the scikit-learn framework, which lets us port our scripts to SageMaker with few changes and benefit from its training and deployment capabilities. The SageMaker Scikit-learn Container is an open source library for making the scikit-learn framework run on the Amazon SageMaker platform. You can read more about the container’s features on its GitHub page, SageMaker Scikit-learn Container.

Amazon SageMaker also provides an open source Python SDK to train and deploy models on SageMaker. The SageMaker Python SDK provides several high-level abstractions (classes), including:

- Session: provides a collection of methods for working with SageMaker resources

- Estimators: encapsulate training on SageMaker

- Predictors: provide real-time inference and transformation using Python data types against a SageMaker endpoint

You can read more about the SageMaker Python SDK on its official site, Amazon SageMaker Python SDK.

This approach of using a custom training script with SageMaker’s prebuilt container is commonly called Script Mode. Training a scikit-learn model with the SageMaker Python SDK involves three steps:

- Prepare a training script. The training script is similar to any other scikit-learn training script that you might use outside of SageMaker.

- Create an Estimator object from the class sagemaker.sklearn.SKLearn. The scikit-learn estimator class handles end-to-end training and deployment of custom scikit-learn code. We pass our training script to the SKLearn estimator, and it executes the script within a SageMaker training job. The training job runs an Amazon-built Docker container that executes the functions defined in the provided Python script.

- Call the Estimator’s fit method on training data. Training is started by calling fit() on this Estimator. After training is complete, calling deploy() creates a hosted SageMaker endpoint and returns a SKLearnPredictor instance that can be used to perform inference against the hosted model. We will discuss the SKLearn Estimator in more detail later in this post.
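The three steps can be outlined roughly as follows. This is a minimal sketch rather than the final code of this post: the function name train_and_deploy, the "train" channel name, and the ml.m5.large instance type are assumptions made for illustration, and the SDK import sits inside the function so that the snippet can be loaded without the sagemaker package installed.

```python
def train_and_deploy(script_path, role, train_s3_uri, instance_type="ml.m5.large"):
    """Hypothetical helper outlining the script mode workflow."""
    # Imported lazily so that defining this function does not require the SDK.
    from sagemaker.sklearn import SKLearn

    # Step 2: wrap the training script (step 1) in an SKLearn estimator.
    estimator = SKLearn(
        entry_point=script_path,
        role=role,
        instance_count=1,
        instance_type=instance_type,  # assumed instance type
        framework_version="1.0-1",
    )

    # Step 3: start the training job; "train" is an assumed channel name.
    estimator.fit({"train": train_s3_uri})

    # Deploy the trained model to a hosted endpoint and return the predictor.
    return estimator.deploy(initial_instance_count=1, instance_type=instance_type)
```

Calling this function starts real AWS resources, so it is meant as a reading aid for the workflow, not something to run as-is.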

To read more about using scikit-learn with the SageMaker Python SDK, refer to the official documentation, Using Scikit-learn with the SageMaker Python SDK. It is valuable, and I highly recommend reading it and keeping it as a reference.

In this post we will build a scikit-learn RandomForestClassifier on the public Iris dataset. There is a similar example in the SageMaker documentation, Train a SKLearn Model using Script Mode, but it does not discuss many important aspects of the scikit-learn container and its environment. In this post, we will learn about them and cover the details of training a scikit-learn model with script mode. I also noted that the example in the documentation uses RandomForestRegressor on a classification problem, which I believe is a mistake.

We have much to cover and learn, so let’s start.

Environment

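This notebook was prepared on an Amazon SageMaker notebook instance of type ml.t3.medium, using the “conda_python3” kernel. The AWS CLI on the instance reports aws-cli/1.22.97 Python/3.8.12 Linux/5.10.102-99.473.amzn2.x86_64 botocore/1.24.19. The operating system details and the available conda environments are listed below.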

NAME="Amazon Linux"

VERSION="2"

ID="amzn"

ID_LIKE="centos rhel fedora"

VERSION_ID="2"

PRETTY_NAME="Amazon Linux 2"

ANSI_COLOR="0;33"

CPE_NAME="cpe:2.3:o:amazon:amazon_linux:2"

HOME_URL="https://amazonlinux.com/"

# conda environments:

#

base /home/ec2-user/anaconda3

JupyterSystemEnv /home/ec2-user/anaconda3/envs/JupyterSystemEnv

R /home/ec2-user/anaconda3/envs/R

amazonei_mxnet_p36 /home/ec2-user/anaconda3/envs/amazonei_mxnet_p36

amazonei_pytorch_latest_p37 /home/ec2-user/anaconda3/envs/amazonei_pytorch_latest_p37

amazonei_tensorflow2_p36 /home/ec2-user/anaconda3/envs/amazonei_tensorflow2_p36

mxnet_p37 /home/ec2-user/anaconda3/envs/mxnet_p37

python3 * /home/ec2-user/anaconda3/envs/python3

pytorch_p38 /home/ec2-user/anaconda3/envs/pytorch_p38

tensorflow2_p38 /home/ec2-user/anaconda3/envs/tensorflow2_p38

Prepare training and test data

We will use the Iris flower dataset. It includes three iris species (Iris setosa, Iris virginica, and Iris versicolor) with 50 samples each. Four features were measured for each sample: the length and the width of the sepals and petals, in centimeters. We can train a model to distinguish the species from each other based on the combination of these four features. You can read more about this dataset at Iris flower data set. The dataset has five columns:

1. sepal length in cm

2. sepal width in cm

3. petal length in cm

4. petal width in cm

5. class: Iris Setosa, Iris Versicolour, Iris Virginica

Download and preprocess data

##

# download dataset

import boto3

import pandas as pd

import numpy as np

s3 = boto3.client("s3")

s3.download_file(

    "sagemaker-sample-files", "datasets/tabular/iris/iris.data", "iris.data"

)

df = pd.read_csv(

"iris.data",

header=None,

names=["sepal_len", "sepal_wid", "petal_len", "petal_wid", "class"],

)

df.head()

| | sepal_len | sepal_wid | petal_len | petal_wid | class |
|---|---|---|---|---|---|
| 0 | 5.1 | 3.5 | 1.4 | 0.2 | Iris-setosa |
| 1 | 4.9 | 3.0 | 1.4 | 0.2 | Iris-setosa |
| 2 | 4.7 | 3.2 | 1.3 | 0.2 | Iris-setosa |
| 3 | 4.6 | 3.1 | 1.5 | 0.2 | Iris-setosa |
| 4 | 5.0 | 3.6 | 1.4 | 0.2 | Iris-setosa |

##

# Convert the three classes from strings to integers in {0,1,2}

df["class_cat"] = df["class"].astype("category").cat.codes

categories_map = dict(enumerate(df["class"].astype("category").cat.categories))

print(categories_map)

df.head()

{0: 'Iris-setosa', 1: 'Iris-versicolor', 2: 'Iris-virginica'}

| | sepal_len | sepal_wid | petal_len | petal_wid | class | class_cat |
|---|---|---|---|---|---|---|
| 0 | 5.1 | 3.5 | 1.4 | 0.2 | Iris-setosa | 0 |
| 1 | 4.9 | 3.0 | 1.4 | 0.2 | Iris-setosa | 0 |
| 2 | 4.7 | 3.2 | 1.3 | 0.2 | Iris-setosa | 0 |
| 3 | 4.6 | 3.1 | 1.5 | 0.2 | Iris-setosa | 0 |
| 4 | 5.0 | 3.6 | 1.4 | 0.2 | Iris-setosa | 0 |

Prepare and store train and test sets as CSV files

##

# split the data into train and test set

from sklearn.model_selection import train_test_split

train, test = train_test_split(df, test_size=0.2, random_state=42)

print(f"train.shape: {train.shape}")

print(f"test.shape: {test.shape}")

train.shape: (120, 6)

test.shape: (30, 6)

We have our dataset ready. Let’s define a local directory local_path to keep all the files and artifacts related to this post. I will refer to this directory as ‘workspace’.
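The cell that defines the workspace is not shown here. Judging from the local paths and S3 URIs printed later in this post, it likely resembles the following sketch. The exact values of local_path and bucket_prefix are inferred from that output and should be treated as assumptions.

```python
from pathlib import Path

# Assumed values, inferred from the paths printed later in this post.
bucket_prefix = "2022-07-07-sagemaker-script-mode"
local_path = "./datasets/" + bucket_prefix

# Create the workspace directory if it does not exist yet.
Path(local_path).mkdir(parents=True, exist_ok=True)

print("local_path: ", local_path)
print("bucket_prefix: ", bucket_prefix)
```

The same bucket_prefix is reused later as the key prefix when uploading data to S3.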

We have train and test sets ready. Let’s create two more directories in our workspace and store our data in them.

from pathlib import Path

# local paths

local_train_path = local_path + "/train"

local_test_path = local_path + "/test"

# create local directories

Path(local_train_path).mkdir(parents=True, exist_ok=True)

Path(local_test_path).mkdir(parents=True, exist_ok=True)

print("local_train_path: ", local_train_path)

print("local_test_path: ", local_test_path)

# local file names

local_train_file = local_train_path + "/train.csv"

local_test_file = local_test_path + "/test.csv"

# write train and test CSV files

train.to_csv(local_train_file, index=False)

test.to_csv(local_test_file, index=False)

print("local_train_file: ", local_train_file)

print("local_test_file: ", local_test_file)

local_train_path: ./datasets/2022-07-07-sagemaker-script-mode/train

local_test_path: ./datasets/2022-07-07-sagemaker-script-mode/test

local_train_file: ./datasets/2022-07-07-sagemaker-script-mode/train/train.csv

local_test_file: ./datasets/2022-07-07-sagemaker-script-mode/test/test.csv

Create SageMaker session

import sagemaker

session = sagemaker.Session()

role = sagemaker.get_execution_role()

bucket = session.default_bucket()

region = session.boto_region_name

print("sagemaker.__version__: ", sagemaker.__version__)

print("Session: ", session)

print("Role: ", role)

print("Bucket: ", bucket)

print("Region: ", region)

sagemaker.__version__: 2.86.2

Session: <sagemaker.session.Session object at 0x7f80ad720460>

Role: arn:aws:iam::801598032724:role/service-role/AmazonSageMakerServiceCatalogProductsUseRole

Bucket: sagemaker-us-east-1-801598032724

Region: us-east-1

What we have done here is:

- imported the SageMaker Python SDK into our runtime

- created a session to work with the SageMaker API and other AWS services

- retrieved the execution role associated with the user profile. It is the same profile that is available to the user to work from the console UI and has the AmazonSageMakerFullAccess policy attached to it.

- created or retrieved a default bucket and returned its name. The default bucket name has the format sagemaker-{region}-{account_id}. If it doesn’t exist, our session creates it automatically. You may also use any other bucket in its place, provided that you have permission to read from and write to it.

- retrieved the region name attached to our session

Next, we will use this session to upload data to our default bucket.

Upload data to Amazon S3 bucket

Now upload the data. In the output, we will get the complete path (S3 URI) for our uploaded data.

s3_train_uri = session.upload_data(local_train_file, key_prefix=bucket_prefix + "/data")

s3_test_uri = session.upload_data(local_test_file, key_prefix=bucket_prefix + "/data")

print("s3_train_uri: ", s3_train_uri)

print("s3_test_uri: ", s3_test_uri)

s3_train_uri: s3://sagemaker-us-east-1-801598032724/2022-07-07-sagemaker-script-mode/data/train.csv

s3_test_uri: s3://sagemaker-us-east-1-801598032724/2022-07-07-sagemaker-script-mode/data/test.csv

At this point, our data preparation step is complete. Train and test CSV files are available on the local system and in our default Amazon S3 bucket.
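The S3 URIs printed above follow a simple pattern: for a single uploaded file, the URI is made of the bucket name, the key prefix, and the file name. The helper below is a hypothetical illustration of that pattern. It does not call AWS, and the name expected_s3_uri is not part of the SDK.

```python
import posixpath


def expected_s3_uri(bucket, key_prefix, local_file):
    """Hypothetical helper: S3 URI expected for a single uploaded file."""
    file_name = posixpath.basename(local_file)
    return f"s3://{bucket}/{key_prefix}/{file_name}"


# Values copied from the output shown in this post.
uri = expected_s3_uri(
    "sagemaker-us-east-1-801598032724",
    "2022-07-07-sagemaker-script-mode/data",
    "./datasets/2022-07-07-sagemaker-script-mode/train/train.csv",
)
print(uri)
```

Because only the file name is kept, train.csv and test.csv end up side by side under the same data prefix even though they live in different local folders.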

Prepare SageMaker local environment

The Amazon SageMaker training environment is managed, but SageMaker Python SDK also supports local mode, allowing you to train and deploy models to your local environment. This is a great way to test training scripts before running them in SageMaker’s managed training or hosting environment.

How the SageMaker managed environment works

When you send a request to the SageMaker API (a fit or deploy call), it:

- spins up new instances with the provided specification

- loads the algorithm container

- pulls the data from S3

- runs the training code

- stores the results and trained model artifacts in S3

- terminates the new instances

All this happens behind the scenes with a single line of code and is a huge advantage. Spinning up new hardware every time can be good for repeatability and security, but it can add some friction while testing and debugging our code. We can test our code on a small dataset in our local environment with SageMaker local mode and then switch seamlessly to SageMaker managed environment by changing a single line of code.
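As an illustration of that single-line switch, the estimator argument that changes between the two modes is instance_type. The sketch below is a minimal illustration; the helper name estimator_settings and the ml.m5.large instance type are assumptions and do not come from this post.

```python
def estimator_settings(use_local_mode, managed_instance_type="ml.m5.large"):
    """Hypothetical helper: estimator keyword arguments for either mode."""
    return {
        "instance_count": 1,
        # "local" selects SageMaker local mode; any other value is treated
        # as a managed instance type.
        "instance_type": "local" if use_local_mode else managed_instance_type,
    }


print(estimator_settings(use_local_mode=True))
print(estimator_settings(use_local_mode=False))
```

The returned dictionary could be unpacked into an estimator constructor, so the rest of the training code stays the same in both modes.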

Steps to prepare Amazon SageMaker local environment

Install the following prerequisites if you want to set up SageMaker local mode on your own system.

1. Install the required Python packages: pip install boto3 sagemaker pandas scikit-learn, followed by pip install 'sagemaker[local]'.

2. Have Docker Desktop installed and running on your computer. You can verify this with docker ps.

3. Configure AWS credentials on your local machine so that the Docker image can be pulled from ECR.
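A quick way to see which of these prerequisites appear to be in place is a small check script. This is a sketch using only the Python standard library; the function name check_local_mode_prerequisites is hypothetical, and finding the docker executable does not prove that the Docker daemon is running or that AWS credentials are configured.

```python
import importlib.util
import shutil


def check_local_mode_prerequisites():
    """Report which local mode prerequisites appear to be present."""
    return {
        # Is a docker executable on PATH? (Does not check the daemon.)
        "docker_cli": shutil.which("docker") is not None,
        # Are the Python packages importable in this environment?
        "boto3": importlib.util.find_spec("boto3") is not None,
        "sagemaker": importlib.util.find_spec("sagemaker") is not None,
    }


for name, found in check_local_mode_prerequisites().items():
    print(f"{name}: {'found' if found else 'missing'}")
```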

Instructions for SageMaker notebook instances

You can also set up SageMaker’s local environment in SageMaker notebook instances. The required Python packages and the Docker service are already there. You only need to upgrade the sagemaker[local] Python package.

# this is required for SageMaker notebook instances

!pip install 'sagemaker[local]' --upgrade

Looking in indexes: https://pypi.org/simple, https://pip.repos.neuron.amazonaws.com

Requirement already satisfied: sagemaker[local] in /home/ec2-user/anaconda3/envs/python3/lib/python3.8/site-packages (2.86.2)

Collecting sagemaker[local]

Downloading sagemaker-2.99.0.tar.gz (542 kB)

Preparing metadata (setup.py) ... done

Collecting botocore<1.25.0,>=1.24.42

Downloading botocore-1.24.46-py3-none-any.whl (8.7 MB)

Building wheels for collected packages: sagemaker

Building wheel for sagemaker (setup.py) ... done

Created wheel for sagemaker: filename=sagemaker-2.99.0-py2.py3-none-any.whl size=756462 sha256=309b5159cfb7f5c739c6159b8bf309bfa7ce28d2ca402296e824f3e84bc837c1

Stored in directory: /home/ec2-user/.cache/pip/wheels/fc/df/14/14b7871f4cf108cfe8891338510d97e28cfe2da00f37114fcf

Successfully built sagemaker

Installing collected packages: botocore, sagemaker

Attempting uninstall: botocore

Found existing installation: botocore 1.24.19

Uninstalling botocore-1.24.19:

Successfully uninstalled botocore-1.24.19

Attempting uninstall: sagemaker

Found existing installation: sagemaker 2.86.2

Uninstalling sagemaker-2.86.2:

Successfully uninstalled sagemaker-2.86.2

ERROR: pip's dependency resolver does not currently take into account all the packages that are installed. This behaviour is the source of the following dependency conflicts.

awscli 1.22.97 requires botocore==1.24.42, but you have botocore 1.24.46 which is incompatible.

aiobotocore 2.0.1 requires botocore<1.22.9,>=1.22.8, but you have botocore 1.24.46 which is incompatible.

Successfully installed botocore-1.24.46 sagemaker-2.99.0

WARNING: You are using pip version 22.0.4; however, version 22.1.2 is available.

You should consider upgrading via the '/home/ec2-user/anaconda3/envs/python3/bin/python -m pip install --upgrade pip' command.

Instructions for SageMaker Studio environment

Note that SageMaker local mode will not work in the SageMaker Studio environment, because the provided instances do not have the Docker service installed.

Create SageMaker local session

SageMaker local session is required for working in a local environment. Let’s create it.
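The cell that creates the session is not shown here; only its output is. A minimal sketch, assuming the LocalSession class from the sagemaker.local module is used with its default settings (the wrapper function create_local_session is hypothetical):

```python
def create_local_session():
    """Return a SageMaker LocalSession (needs the sagemaker[local] package)."""
    # Imported lazily so the function can be defined without the SDK installed.
    from sagemaker.local import LocalSession

    return LocalSession()
```

Calling create_local_session() in a notebook cell and leaving the result as the last expression would display an object representation like the one shown next.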

<sagemaker.local.local_session.LocalSession at 0x7f80ac223910>

Prepare SageMaker training script

We will call our training script train_and_serve.py and place it in our workspace under the /src folder. Then, we will start with a simple Hello World message code. After that, we will update and complete our training script as we learn more about the SageMaker scikit-learn container environment.

script_file_name = "train_and_serve.py"

script_path = local_path + "/src"

script_file = script_path + "/" + script_file_name

print("script_file_name: ", script_file_name)

print("script_path: ", script_path)

print("script_file: ", script_file)

script_file_name: train_and_serve.py

script_path: ./datasets/2022-07-07-sagemaker-script-mode/src

script_file: ./datasets/2022-07-07-sagemaker-script-mode/src/train_and_serve.py

Now the training script.
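The script cell itself is not shown here. Based on the hello message that appears in the container logs later in this post, a first version could look like the sketch below. The helper name write_hello_script is hypothetical, and the target path is assumed to match the script_file value printed above.

```python
from pathlib import Path

# First version of the training script: it only prints a greeting.
HELLO_SCRIPT = 'print("*** Hello from the SageMaker script mode***")\n'


def write_hello_script(script_file):
    """Write the hello-world training script, creating parent folders as needed."""
    path = Path(script_file)
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(HELLO_SCRIPT)
    return path


# Assumed location, matching the script_file value printed above.
written = write_hello_script(
    "./datasets/2022-07-07-sagemaker-script-mode/src/train_and_serve.py"
)
print("written: ", written)
```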

Prepare SageMaker SKLearn estimator

To create an SKLearn Estimator object, we need to pass it the following items:

- entry_point (str): Path (absolute or relative) to the Python source file that should be executed as the entry point to training

- framework_version (str): Scikit-learn version you want to use for executing your model training code

- role (str): An AWS IAM role (either name or full ARN)

- instance_type (str): Type of instance to use for training. For local mode, use the string local

- instance_count (int): Number of instances to use for training. Since we will train in the local environment and have a single instance, we will use ‘1’ here

You can read more about the SKLearn Estimator class in the official documentation, Scikit Learn Estimator.

Let’s find the SKLearn framework version.
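The cell used for this lookup is not shown here. One way to do it, sketched under assumptions, is to read the installed scikit-learn version from package metadata and map it to a tag the SDK accepts. The helper names are hypothetical, and the list of supported tags is copied from the SDK error message quoted in this section, so it reflects the SDK version used in this post only.

```python
from importlib import metadata

# Tags accepted by the SDK used in this post, as listed in its error message.
SUPPORTED_TAGS = ["0.20.0", "0.23-1", "1.0-1"]


def installed_sklearn_version():
    """Return the installed scikit-learn version, or None if it is missing."""
    try:
        return metadata.version("scikit-learn")
    except metadata.PackageNotFoundError:
        return None


def to_framework_version(sklearn_version):
    """Hypothetical helper: pick the supported tag with the same major.minor."""
    major_minor = ".".join(sklearn_version.split(".")[:2])
    for tag in SUPPORTED_TAGS:
        if tag == sklearn_version or tag.split("-")[0] == major_minor:
            return tag
    raise ValueError(f"No supported tag for scikit-learn {sklearn_version}")


print("installed scikit-learn: ", installed_sklearn_version())
print("framework_version for 1.0.1: ", to_framework_version("1.0.1"))
```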

Note that version number 1.0.1 has to be provided to the SKLearn estimator class as 1.0-1. Otherwise, you will get the following error message.

ValueError: Unsupported sklearn version: 1.0.1. You may need to upgrade your SDK version (pip install -U sagemaker) for newer sklearn versions. Supported sklearn version(s): 0.20.0, 0.23-1, 1.0-1.

Now let us create the SageMaker SKLearn estimator object and pass our training script to it.

from sagemaker.sklearn import SKLearn

sk_estimator = SKLearn(

entry_point=script_file,

role=role,

instance_count=1,

instance_type="local",

framework_version="1.0-1"

)

sk_estimator.fit()

WARNING! Using --password via the CLI is insecure. Use --password-stdin.

WARNING! Your password will be stored unencrypted in /home/ec2-user/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Creating fvm7gkf0bq-algo-1-ju7k8 ... done

Attaching to fvm7gkf0bq-algo-1-ju7k8

fvm7gkf0bq-algo-1-ju7k8 | 2022-07-17 15:23:43,041 sagemaker-containers INFO Imported framework sagemaker_sklearn_container.training

fvm7gkf0bq-algo-1-ju7k8 | 2022-07-17 15:23:43,045 sagemaker-training-toolkit INFO No GPUs detected (normal if no gpus installed)

fvm7gkf0bq-algo-1-ju7k8 | 2022-07-17 15:23:43,054 sagemaker_sklearn_container.training INFO Invoking user training script.

fvm7gkf0bq-algo-1-ju7k8 | 2022-07-17 15:23:43,272 sagemaker-training-toolkit INFO No GPUs detected (normal if no gpus installed)

fvm7gkf0bq-algo-1-ju7k8 | 2022-07-17 15:23:43,284 sagemaker-training-toolkit INFO No GPUs detected (normal if no gpus installed)

fvm7gkf0bq-algo-1-ju7k8 | 2022-07-17 15:23:43,297 sagemaker-training-toolkit INFO No GPUs detected (normal if no gpus installed)

fvm7gkf0bq-algo-1-ju7k8 | 2022-07-17 15:23:43,306 sagemaker-training-toolkit INFO Invoking user script

fvm7gkf0bq-algo-1-ju7k8 |

fvm7gkf0bq-algo-1-ju7k8 | Training Env:

fvm7gkf0bq-algo-1-ju7k8 |

fvm7gkf0bq-algo-1-ju7k8 | {

fvm7gkf0bq-algo-1-ju7k8 | "additional_framework_parameters": {},

fvm7gkf0bq-algo-1-ju7k8 | "channel_input_dirs": {},

fvm7gkf0bq-algo-1-ju7k8 | "current_host": "algo-1-ju7k8",

fvm7gkf0bq-algo-1-ju7k8 | "framework_module": "sagemaker_sklearn_container.training:main",

fvm7gkf0bq-algo-1-ju7k8 | "hosts": [

fvm7gkf0bq-algo-1-ju7k8 | "algo-1-ju7k8"

fvm7gkf0bq-algo-1-ju7k8 | ],

fvm7gkf0bq-algo-1-ju7k8 | "hyperparameters": {},

fvm7gkf0bq-algo-1-ju7k8 | "input_config_dir": "/opt/ml/input/config",

fvm7gkf0bq-algo-1-ju7k8 | "input_data_config": {},

fvm7gkf0bq-algo-1-ju7k8 | "input_dir": "/opt/ml/input",

fvm7gkf0bq-algo-1-ju7k8 | "is_master": true,

fvm7gkf0bq-algo-1-ju7k8 | "job_name": "sagemaker-scikit-learn-2022-07-17-15-22-17-814",

fvm7gkf0bq-algo-1-ju7k8 | "log_level": 20,

fvm7gkf0bq-algo-1-ju7k8 | "master_hostname": "algo-1-ju7k8",

fvm7gkf0bq-algo-1-ju7k8 | "model_dir": "/opt/ml/model",

fvm7gkf0bq-algo-1-ju7k8 | "module_dir": "s3://sagemaker-us-east-1-801598032724/sagemaker-scikit-learn-2022-07-17-15-22-17-814/source/sourcedir.tar.gz",

fvm7gkf0bq-algo-1-ju7k8 | "module_name": "train_and_serve",

fvm7gkf0bq-algo-1-ju7k8 | "network_interface_name": "eth0",

fvm7gkf0bq-algo-1-ju7k8 | "num_cpus": 2,

fvm7gkf0bq-algo-1-ju7k8 | "num_gpus": 0,

fvm7gkf0bq-algo-1-ju7k8 | "output_data_dir": "/opt/ml/output/data",

fvm7gkf0bq-algo-1-ju7k8 | "output_dir": "/opt/ml/output",

fvm7gkf0bq-algo-1-ju7k8 | "output_intermediate_dir": "/opt/ml/output/intermediate",

fvm7gkf0bq-algo-1-ju7k8 | "resource_config": {

fvm7gkf0bq-algo-1-ju7k8 | "current_host": "algo-1-ju7k8",

fvm7gkf0bq-algo-1-ju7k8 | "hosts": [

fvm7gkf0bq-algo-1-ju7k8 | "algo-1-ju7k8"

fvm7gkf0bq-algo-1-ju7k8 | ]

fvm7gkf0bq-algo-1-ju7k8 | },

fvm7gkf0bq-algo-1-ju7k8 | "user_entry_point": "train_and_serve.py"

fvm7gkf0bq-algo-1-ju7k8 | }

fvm7gkf0bq-algo-1-ju7k8 |

fvm7gkf0bq-algo-1-ju7k8 | Environment variables:

fvm7gkf0bq-algo-1-ju7k8 |

fvm7gkf0bq-algo-1-ju7k8 | SM_HOSTS=["algo-1-ju7k8"]

fvm7gkf0bq-algo-1-ju7k8 | SM_NETWORK_INTERFACE_NAME=eth0

fvm7gkf0bq-algo-1-ju7k8 | SM_HPS={}

fvm7gkf0bq-algo-1-ju7k8 | SM_USER_ENTRY_POINT=train_and_serve.py

fvm7gkf0bq-algo-1-ju7k8 | SM_FRAMEWORK_PARAMS={}

fvm7gkf0bq-algo-1-ju7k8 | SM_RESOURCE_CONFIG={"current_host":"algo-1-ju7k8","hosts":["algo-1-ju7k8"]}

fvm7gkf0bq-algo-1-ju7k8 | SM_INPUT_DATA_CONFIG={}

fvm7gkf0bq-algo-1-ju7k8 | SM_OUTPUT_DATA_DIR=/opt/ml/output/data

fvm7gkf0bq-algo-1-ju7k8 | SM_CHANNELS=[]

fvm7gkf0bq-algo-1-ju7k8 | SM_CURRENT_HOST=algo-1-ju7k8

fvm7gkf0bq-algo-1-ju7k8 | SM_MODULE_NAME=train_and_serve

fvm7gkf0bq-algo-1-ju7k8 | SM_LOG_LEVEL=20

fvm7gkf0bq-algo-1-ju7k8 | SM_FRAMEWORK_MODULE=sagemaker_sklearn_container.training:main

fvm7gkf0bq-algo-1-ju7k8 | SM_INPUT_DIR=/opt/ml/input

fvm7gkf0bq-algo-1-ju7k8 | SM_INPUT_CONFIG_DIR=/opt/ml/input/config

fvm7gkf0bq-algo-1-ju7k8 | SM_OUTPUT_DIR=/opt/ml/output

fvm7gkf0bq-algo-1-ju7k8 | SM_NUM_CPUS=2

fvm7gkf0bq-algo-1-ju7k8 | SM_NUM_GPUS=0

fvm7gkf0bq-algo-1-ju7k8 | SM_MODEL_DIR=/opt/ml/model

fvm7gkf0bq-algo-1-ju7k8 | SM_MODULE_DIR=s3://sagemaker-us-east-1-801598032724/sagemaker-scikit-learn-2022-07-17-15-22-17-814/source/sourcedir.tar.gz

fvm7gkf0bq-algo-1-ju7k8 | SM_TRAINING_ENV={"additional_framework_parameters":{},"channel_input_dirs":{},"current_host":"algo-1-ju7k8","framework_module":"sagemaker_sklearn_container.training:main","hosts":["algo-1-ju7k8"],"hyperparameters":{},"input_config_dir":"/opt/ml/input/config","input_data_config":{},"input_dir":"/opt/ml/input","is_master":true,"job_name":"sagemaker-scikit-learn-2022-07-17-15-22-17-814","log_level":20,"master_hostname":"algo-1-ju7k8","model_dir":"/opt/ml/model","module_dir":"s3://sagemaker-us-east-1-801598032724/sagemaker-scikit-learn-2022-07-17-15-22-17-814/source/sourcedir.tar.gz","module_name":"train_and_serve","network_interface_name":"eth0","num_cpus":2,"num_gpus":0,"output_data_dir":"/opt/ml/output/data","output_dir":"/opt/ml/output","output_intermediate_dir":"/opt/ml/output/intermediate","resource_config":{"current_host":"algo-1-ju7k8","hosts":["algo-1-ju7k8"]},"user_entry_point":"train_and_serve.py"}

fvm7gkf0bq-algo-1-ju7k8 | SM_USER_ARGS=[]

fvm7gkf0bq-algo-1-ju7k8 | SM_OUTPUT_INTERMEDIATE_DIR=/opt/ml/output/intermediate

fvm7gkf0bq-algo-1-ju7k8 | PYTHONPATH=/opt/ml/code:/miniconda3/bin:/miniconda3/lib/python38.zip:/miniconda3/lib/python3.8:/miniconda3/lib/python3.8/lib-dynload:/miniconda3/lib/python3.8/site-packages

fvm7gkf0bq-algo-1-ju7k8 |

fvm7gkf0bq-algo-1-ju7k8 | Invoking script with the following command:

fvm7gkf0bq-algo-1-ju7k8 |

fvm7gkf0bq-algo-1-ju7k8 | /miniconda3/bin/python train_and_serve.py

fvm7gkf0bq-algo-1-ju7k8 |

fvm7gkf0bq-algo-1-ju7k8 |

fvm7gkf0bq-algo-1-ju7k8 | *** Hello from the SageMaker script mode***

fvm7gkf0bq-algo-1-ju7k8 | 2022-07-17 15:23:43,332 sagemaker-containers INFO Reporting training SUCCESS

fvm7gkf0bq-algo-1-ju7k8 exited with code 0

Aborting on container exit...

===== Job Complete =====

##

# The estimator will pick a local session when we use instance_type='local'

sk_estimator.sagemaker_session

<sagemaker.local.local_session.LocalSession at 0x7f80ac53da90>

When you first run the SKLearn estimator, it may take some time because it has to download the scikit-learn container to the local Docker environment. The container logs appear in the output once the container completes execution. The logs show that the container ran the training script successfully and printed the hello message. There is a lot more information in the logs, which we will discuss in the coming section.
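Among other things, the logs list SM_* environment variables such as SM_MODEL_DIR and SM_OUTPUT_DATA_DIR. A common pattern is to read them in the training script as argparse defaults, so the same script also runs outside the container. The sketch below illustrates that pattern and is not the final script of this post; the argument names are assumptions, and the fallback paths simply mirror the values shown in the logs.

```python
import argparse
import os


def parse_args(argv=None):
    """Read container paths from SM_* variables, allowing CLI overrides."""
    parser = argparse.ArgumentParser()
    parser.add_argument(
        "--model-dir",
        default=os.environ.get("SM_MODEL_DIR", "/opt/ml/model"),
    )
    parser.add_argument(
        "--output-data-dir",
        default=os.environ.get("SM_OUTPUT_DATA_DIR", "/opt/ml/output/data"),
    )
    return parser.parse_args(argv)


args = parse_args([])
print("model_dir: ", args.model_dir)
print("output_data_dir: ", args.output_data_dir)
```

Passing an explicit list to parse_args, as done here, keeps the example independent of the command line of whatever process runs it.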

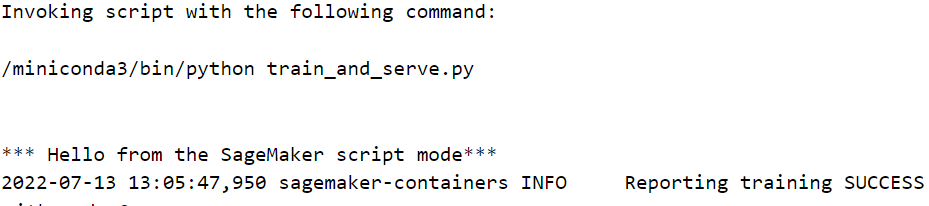

Understanding SKLearn container output and environment variables

From the SKLearn estimator output, we can see that our train_and_serve.py script is executed by the container with the following command.

/miniconda3/bin/python train_and_serve.py

Inspecting SageMaker SKLearn docker image

Since the container was executed in the local environment, we can also inspect the SageMaker SKLearn local image.

REPOSITORY TAG IMAGE ID CREATED SIZE

683313688378.dkr.ecr.us-east-1.amazonaws.com/sagemaker-scikit-learn 1.0-1-cpu-py3 8a6ea8272ad0 10 days ago 3.7GB

Let’s also inspect the docker image. Notice multiple container environment variables and their default values in the output.
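The cells that produced these outputs are not shown here; output of this shape typically comes from the docker images and docker inspect commands. To make the environment variables easier to scan, the Env list in the inspect output can be turned into a dictionary. The sketch below assumes the JSON has the usual shape (a list with one object whose Config or ContainerConfig section holds an Env list); the function name env_from_inspect is hypothetical, and the sample is a shortened stand-in with values copied from this post.

```python
import json


def env_from_inspect(inspect_json):
    """Turn the Env list of docker inspect output into a dict."""
    image = json.loads(inspect_json)[0]  # docker inspect prints a JSON list
    section = image.get("Config") or image.get("ContainerConfig") or {}
    env = {}
    for entry in section.get("Env") or []:
        # Each entry looks like NAME=value; split on the first "=" only.
        name, _, value = entry.partition("=")
        env[name] = value
    return env


# Shortened sample in the shape of the real output.
sample = json.dumps([{"Config": {"Env": [
    "SAGEMAKER_SKLEARN_VERSION=1.0-1",
    "SM_MODEL_DIR=/opt/ml/model",
]}}])
env = env_from_inspect(sample)
print(env["SM_MODEL_DIR"])
```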

[

{

"Id": "sha256:8a6ea8272ad003ec816569b0f879b16c770116584301161565f065aadb99436c",

"RepoTags": [

"683313688378.dkr.ecr.us-east-1.amazonaws.com/sagemaker-scikit-learn:1.0-1-cpu-py3"

],

"RepoDigests": [

"683313688378.dkr.ecr.us-east-1.amazonaws.com/sagemaker-scikit-learn@sha256:fc8c3a617ff0e436c25f3b64d03e1f485f1d159478c26757f3d1d267fc849445"

],

"Parent": "",

"Comment": "",

"Created": "2022-07-06T18:55:02.854297671Z",

"Container": "11b9a5fec2d61294aee63e549100ed18ceb7aa0de6a4ff198da2f556dfe3ec2f",

"ContainerConfig": {

"Hostname": "11b9a5fec2d6",

"Domainname": "",

"User": "",

"AttachStdin": false,

"AttachStdout": false,

"AttachStderr": false,

"ExposedPorts": {

"8080/tcp": {}

},

"Tty": false,

"OpenStdin": false,

"StdinOnce": false,

"Env": [

"PATH=/miniconda3/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin",

"PYTHONDONTWRITEBYTECODE=1",

"PYTHONUNBUFFERED=1",

"PYTHONIOENCODING=UTF-8",

"LANG=C.UTF-8",

"LC_ALL=C.UTF-8",

"SAGEMAKER_SKLEARN_VERSION=1.0-1",

"SAGEMAKER_TRAINING_MODULE=sagemaker_sklearn_container.training:main",

"SAGEMAKER_SERVING_MODULE=sagemaker_sklearn_container.serving:main",

"SKLEARN_MMS_CONFIG=/home/model-server/config.properties",

"SM_INPUT=/opt/ml/input",

"SM_INPUT_TRAINING_CONFIG_FILE=/opt/ml/input/config/hyperparameters.json",

"SM_INPUT_DATA_CONFIG_FILE=/opt/ml/input/config/inputdataconfig.json",

"SM_CHECKPOINT_CONFIG_FILE=/opt/ml/input/config/checkpointconfig.json",

"SM_MODEL_DIR=/opt/ml/model",

"TEMP=/home/model-server/tmp"

],

"Cmd": [

"/bin/sh",

"-c",

"#(nop) ",

"LABEL transform_id=9be8b540-703b-4ecd-a127-c37333a0dcec_sagemaker-scikit-learn-1_0"

],

"Image": "sha256:58b15b990d550868caed6f885423deee97a6c7f525c228a043096bf28e775d18",

"Volumes": null,

"WorkingDir": "",

"Entrypoint": null,

"OnBuild": null,

"Labels": {

"TRANSFORM_TYPE": "Aggregate-1.0",

"VERSION_SET_NAME": "SMFrameworksSKLearn/release-cdk",

"VERSION_SET_REVISION": "6086988568",

"com.amazonaws.sagemaker.capabilities.accept-bind-to-port": "true",

"com.amazonaws.sagemaker.capabilities.multi-models": "true",

"transform_id": "9be8b540-703b-4ecd-a127-c37333a0dcec_sagemaker-scikit-learn-1_0"

}

},

"DockerVersion": "20.10.15",

"Author": "",

"Config": {

"Hostname": "",

"Domainname": "",

"User": "",

"AttachStdin": false,

"AttachStdout": false,

"AttachStderr": false,

"ExposedPorts": {

"8080/tcp": {}

},

"Tty": false,

"OpenStdin": false,

"StdinOnce": false,

"Env": [

"PATH=/miniconda3/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin",

"PYTHONDONTWRITEBYTECODE=1",

"PYTHONUNBUFFERED=1",

"PYTHONIOENCODING=UTF-8",

"LANG=C.UTF-8",

"LC_ALL=C.UTF-8",

"SAGEMAKER_SKLEARN_VERSION=1.0-1",

"SAGEMAKER_TRAINING_MODULE=sagemaker_sklearn_container.training:main",

"SAGEMAKER_SERVING_MODULE=sagemaker_sklearn_container.serving:main",

"SKLEARN_MMS_CONFIG=/home/model-server/config.properties",

"SM_INPUT=/opt/ml/input",

"SM_INPUT_TRAINING_CONFIG_FILE=/opt/ml/input/config/hyperparameters.json",

"SM_INPUT_DATA_CONFIG_FILE=/opt/ml/input/config/inputdataconfig.json",

"SM_CHECKPOINT_CONFIG_FILE=/opt/ml/input/config/checkpointconfig.json",

"SM_MODEL_DIR=/opt/ml/model",

"TEMP=/home/model-server/tmp"

],

"Cmd": [

"bash"

],

"Image": "sha256:58b15b990d550868caed6f885423deee97a6c7f525c228a043096bf28e775d18",

"Volumes": null,

"WorkingDir": "",

"Entrypoint": null,

"OnBuild": null,

"Labels": {

"TRANSFORM_TYPE": "Aggregate-1.0",

"VERSION_SET_NAME": "SMFrameworksSKLearn/release-cdk",

"VERSION_SET_REVISION": "6086988568",

"com.amazonaws.sagemaker.capabilities.accept-bind-to-port": "true",

"com.amazonaws.sagemaker.capabilities.multi-models": "true",

"transform_id": "9be8b540-703b-4ecd-a127-c37333a0dcec_sagemaker-scikit-learn-1_0"

}

},

"Architecture": "amd64",

"Os": "linux",

"Size": 3699696670,

"VirtualSize": 3699696670,

"GraphDriver": {

"Data": {

"LowerDir": "/var/lib/docker/overlay2/01a97258168fa360e9f6aa63ac0c6b2417c0ea0ebe888123edad87eb4a646765/diff:/var/lib/docker/overlay2/3b85b71e8fe52c7a27ae71ed492ff72c7e430cccdeea17046e2a361e8d7fd960/diff:/var/lib/docker/overlay2/7de8e16dd696c868ffd028a3ba1f1a80ef04237b9323229e578bc5e3aa6a29d7/diff:/var/lib/docker/overlay2/5eeb27014ab7ac7a894efdbb166d8a87fb9d4b8b739eccd82546ad6a2b53aa70/diff:/var/lib/docker/overlay2/bbd9a81a7aa5bf4c79e81ecf47670a3f8c098eee9c6682f36f88ec52db8e1946/diff:/var/lib/docker/overlay2/eb0e7f3a5bd45c1d611e4c37ba641d1e978043954312da5908fd4003c41c7e7d/diff:/var/lib/docker/overlay2/3daaedc78711e353befc51544a944ad35954327325d056094f445502bf65ce53/diff:/var/lib/docker/overlay2/9dd41e3edfb9d8f852732a968a7b179ca811e0f9d55614a0b193de753fc6aca0/diff:/var/lib/docker/overlay2/ede189a574c79eebc565041a44ebf8b586247a36a99fe3ff9588b8c940783498/diff:/var/lib/docker/overlay2/6b1d78a9c074a42d78650406b90b7b4f51eb31660a7b1e2dcc6d73cc43d29b6b/diff:/var/lib/docker/overlay2/3e0420f6740f876c9355d526cbdedd9ebde5be94ddf0d93d7dadd4f34cae351b/diff:/var/lib/docker/overlay2/de1a2da7ee1b5d9a1b4e5c3dd1adff213185dde7e1212db96c0435e512f50701/diff:/var/lib/docker/overlay2/bebca69aef394f0553634413c7875eb58228c7e6359a305a7501705e75c2b58b/diff:/var/lib/docker/overlay2/8a410db2a038a175ee6ddfb005383f8776c80b1b1901f5d2feedfc8d837ffa40/diff:/var/lib/docker/overlay2/6f6686a8cb3ccf47b214854717cbe33ba777e0985200e3d7b7f761f99231b274/diff:/var/lib/docker/overlay2/ad8b24fa9173d28a83284e4f31d830f1b3d9fe30a3fcc8cbb37895ec2fded7bf/diff:/var/lib/docker/overlay2/e8b0842f0da5b0dbb5076e350bfe1a70ef291546bbbf207fe1f90ae7ccd64517/diff",

"MergedDir": "/var/lib/docker/overlay2/632d2d4d01646bd8be2ec147edc70eb44f59fb262aa12b217fd560c464edd4cb/merged",

"UpperDir": "/var/lib/docker/overlay2/632d2d4d01646bd8be2ec147edc70eb44f59fb262aa12b217fd560c464edd4cb/diff",

"WorkDir": "/var/lib/docker/overlay2/632d2d4d01646bd8be2ec147edc70eb44f59fb262aa12b217fd560c464edd4cb/work"

},

"Name": "overlay2"

},

"RootFS": {

"Type": "layers",

"Layers": [

"sha256:1dc52a6b4de8561423dd3ec5a1f7f77f5309fd8cb340f80b8bc3d87fa112003e",

"sha256:b13a10ce059365d68a2113e9dbcac05b17b51f181615fca6d717a0dcf9ba8ffb",

"sha256:790d00cf365a312488151b354f0b0ae826be031edffb8a4de6a1fab048774dc7",

"sha256:323e43c53a1cd5abbd55437588f19da04f716452bc6d05486759b35f3e485390",

"sha256:c99c9d462af0bac5511ed046178ab0de79b8cdad33cd85246e9f661e098426cd",

"sha256:4a3a4d9fb4d250b1b64629b23bc0a477a45ee2659a8410d59a31a181dad70002",

"sha256:27b35f432a27e5e275038e559ebbe1aa7e91447bf417f5da01e3326739ba9366",

"sha256:ee12325fe0b7e7930b76d9a3dc81fcc37fa51a3267b311d2ed7c38703f193d75",

"sha256:7ceb40593535cdc07299efa2ce3a2c2267c2fa683161515fd6ab97f733492bf0",

"sha256:f18dbe0eec054f0aedf54a94aa29dab0d2c0f3d920fb482c99819622b0094f47",

"sha256:df2a7845ea611463f9f3282ccb45156ba883f40b15013ee49bd0a569301738d8",

"sha256:bcbd5416b87e3e37e05c22e46cbff2e3503d9caa0ec283a44931dc63e51c8cb7",

"sha256:5bcbb3ccae766c8a72d98ce494500bfd44c32e5780a1cb153139a4c5c143a8d5",

"sha256:4ecc8a8ffa902f3ea9bebb8d610e02a32ce1ca94c1a3160a31da98b73c1f55a0",

"sha256:a7a7b8b26735eb2d137fd0f91b83c73ad48cf2c4b83e9d0cadece410d6e598ba",

"sha256:ae939a0c9d32674ad6674947853ecfda4ff0530a8137960064448ae5e45fa1c5",

"sha256:6948f39c8f3cf6ec104734ccd1112fcb4af85a7c26c9c3d43495494b9b799f25",

"sha256:affd18c8e88f35e75bd02158e0418f3aeb4eec4269a208ede24cc829fa88c850"

]

},

"Metadata": {

"LastTagTime": "0001-01-01T00:00:00Z"

}

}

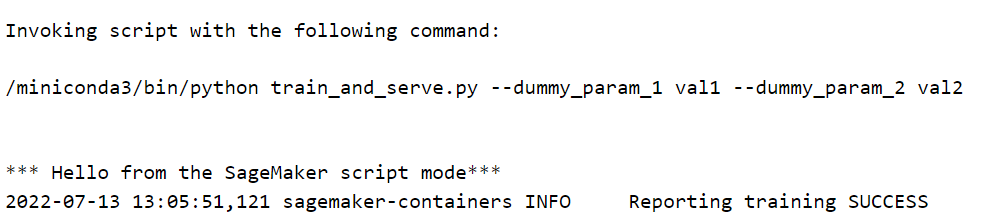

]Pass hyperparameters to SKLearn estimator

Let’s pass some dummy hyperparameters to the estimator and see how it affects the output.

#collapse-output

sk_estimator = SKLearn(
    entry_point=script_file,
    role=role,
    instance_count=1,
    instance_type='local',
    framework_version="1.0-1",
    hyperparameters={"dummy_param_1":"val1","dummy_param_2":"val2"},
)

sk_estimator.fit()

Creating kc4ahx6e84-algo-1-8m8ve ... Creating kc4ahx6e84-algo-1-8m8ve ... done Attaching to kc4ahx6e84-algo-1-8m8ve kc4ahx6e84-algo-1-8m8ve | 2022-07-17 15:23:46,385 sagemaker-containers INFO Imported framework sagemaker_sklearn_container.training kc4ahx6e84-algo-1-8m8ve | 2022-07-17 15:23:46,389 sagemaker-training-toolkit INFO No GPUs detected (normal if no gpus installed) kc4ahx6e84-algo-1-8m8ve | 2022-07-17 15:23:46,398 sagemaker_sklearn_container.training INFO Invoking user training script. kc4ahx6e84-algo-1-8m8ve | 2022-07-17 15:23:46,595 sagemaker-training-toolkit INFO No GPUs detected (normal if no gpus installed) kc4ahx6e84-algo-1-8m8ve | 2022-07-17 15:23:46,608 sagemaker-training-toolkit INFO No GPUs detected (normal if no gpus installed) kc4ahx6e84-algo-1-8m8ve | 2022-07-17 15:23:46,621 sagemaker-training-toolkit INFO No GPUs detected (normal if no gpus installed) kc4ahx6e84-algo-1-8m8ve | 2022-07-17 15:23:46,630 sagemaker-training-toolkit INFO Invoking user script kc4ahx6e84-algo-1-8m8ve | kc4ahx6e84-algo-1-8m8ve | Training Env: kc4ahx6e84-algo-1-8m8ve | kc4ahx6e84-algo-1-8m8ve | { kc4ahx6e84-algo-1-8m8ve | "additional_framework_parameters": {}, kc4ahx6e84-algo-1-8m8ve | "channel_input_dirs": {}, kc4ahx6e84-algo-1-8m8ve | "current_host": "algo-1-8m8ve", kc4ahx6e84-algo-1-8m8ve | "framework_module": "sagemaker_sklearn_container.training:main", kc4ahx6e84-algo-1-8m8ve | "hosts": [ kc4ahx6e84-algo-1-8m8ve | "algo-1-8m8ve" kc4ahx6e84-algo-1-8m8ve | ], kc4ahx6e84-algo-1-8m8ve | "hyperparameters": { kc4ahx6e84-algo-1-8m8ve | "dummy_param_1": "val1", kc4ahx6e84-algo-1-8m8ve | "dummy_param_2": "val2" kc4ahx6e84-algo-1-8m8ve | }, kc4ahx6e84-algo-1-8m8ve | "input_config_dir": "/opt/ml/input/config", kc4ahx6e84-algo-1-8m8ve | "input_data_config": {}, kc4ahx6e84-algo-1-8m8ve | "input_dir": "/opt/ml/input", kc4ahx6e84-algo-1-8m8ve | "is_master": true, kc4ahx6e84-algo-1-8m8ve | "job_name": "sagemaker-scikit-learn-2022-07-17-15-23-44-284", 
kc4ahx6e84-algo-1-8m8ve | "log_level": 20, kc4ahx6e84-algo-1-8m8ve | "master_hostname": "algo-1-8m8ve", kc4ahx6e84-algo-1-8m8ve | "model_dir": "/opt/ml/model", kc4ahx6e84-algo-1-8m8ve | "module_dir": "s3://sagemaker-us-east-1-801598032724/sagemaker-scikit-learn-2022-07-17-15-23-44-284/source/sourcedir.tar.gz", kc4ahx6e84-algo-1-8m8ve | "module_name": "train_and_serve", kc4ahx6e84-algo-1-8m8ve | "network_interface_name": "eth0", kc4ahx6e84-algo-1-8m8ve | "num_cpus": 2, kc4ahx6e84-algo-1-8m8ve | "num_gpus": 0, kc4ahx6e84-algo-1-8m8ve | "output_data_dir": "/opt/ml/output/data", kc4ahx6e84-algo-1-8m8ve | "output_dir": "/opt/ml/output", kc4ahx6e84-algo-1-8m8ve | "output_intermediate_dir": "/opt/ml/output/intermediate", kc4ahx6e84-algo-1-8m8ve | "resource_config": { kc4ahx6e84-algo-1-8m8ve | "current_host": "algo-1-8m8ve", kc4ahx6e84-algo-1-8m8ve | "hosts": [ kc4ahx6e84-algo-1-8m8ve | "algo-1-8m8ve" kc4ahx6e84-algo-1-8m8ve | ] kc4ahx6e84-algo-1-8m8ve | }, kc4ahx6e84-algo-1-8m8ve | "user_entry_point": "train_and_serve.py" kc4ahx6e84-algo-1-8m8ve | } kc4ahx6e84-algo-1-8m8ve | kc4ahx6e84-algo-1-8m8ve | Environment variables: kc4ahx6e84-algo-1-8m8ve | kc4ahx6e84-algo-1-8m8ve | SM_HOSTS=["algo-1-8m8ve"] kc4ahx6e84-algo-1-8m8ve | SM_NETWORK_INTERFACE_NAME=eth0 kc4ahx6e84-algo-1-8m8ve | SM_HPS={"dummy_param_1":"val1","dummy_param_2":"val2"} kc4ahx6e84-algo-1-8m8ve | SM_USER_ENTRY_POINT=train_and_serve.py kc4ahx6e84-algo-1-8m8ve | SM_FRAMEWORK_PARAMS={} kc4ahx6e84-algo-1-8m8ve | SM_RESOURCE_CONFIG={"current_host":"algo-1-8m8ve","hosts":["algo-1-8m8ve"]} kc4ahx6e84-algo-1-8m8ve | SM_INPUT_DATA_CONFIG={} kc4ahx6e84-algo-1-8m8ve | SM_OUTPUT_DATA_DIR=/opt/ml/output/data kc4ahx6e84-algo-1-8m8ve | SM_CHANNELS=[] kc4ahx6e84-algo-1-8m8ve | SM_CURRENT_HOST=algo-1-8m8ve kc4ahx6e84-algo-1-8m8ve | SM_MODULE_NAME=train_and_serve kc4ahx6e84-algo-1-8m8ve | SM_LOG_LEVEL=20 kc4ahx6e84-algo-1-8m8ve | SM_FRAMEWORK_MODULE=sagemaker_sklearn_container.training:main kc4ahx6e84-algo-1-8m8ve | 
SM_INPUT_DIR=/opt/ml/input kc4ahx6e84-algo-1-8m8ve | SM_INPUT_CONFIG_DIR=/opt/ml/input/config kc4ahx6e84-algo-1-8m8ve | SM_OUTPUT_DIR=/opt/ml/output kc4ahx6e84-algo-1-8m8ve | SM_NUM_CPUS=2 kc4ahx6e84-algo-1-8m8ve | SM_NUM_GPUS=0 kc4ahx6e84-algo-1-8m8ve | SM_MODEL_DIR=/opt/ml/model kc4ahx6e84-algo-1-8m8ve | SM_MODULE_DIR=s3://sagemaker-us-east-1-801598032724/sagemaker-scikit-learn-2022-07-17-15-23-44-284/source/sourcedir.tar.gz kc4ahx6e84-algo-1-8m8ve | SM_TRAINING_ENV={"additional_framework_parameters":{},"channel_input_dirs":{},"current_host":"algo-1-8m8ve","framework_module":"sagemaker_sklearn_container.training:main","hosts":["algo-1-8m8ve"],"hyperparameters":{"dummy_param_1":"val1","dummy_param_2":"val2"},"input_config_dir":"/opt/ml/input/config","input_data_config":{},"input_dir":"/opt/ml/input","is_master":true,"job_name":"sagemaker-scikit-learn-2022-07-17-15-23-44-284","log_level":20,"master_hostname":"algo-1-8m8ve","model_dir":"/opt/ml/model","module_dir":"s3://sagemaker-us-east-1-801598032724/sagemaker-scikit-learn-2022-07-17-15-23-44-284/source/sourcedir.tar.gz","module_name":"train_and_serve","network_interface_name":"eth0","num_cpus":2,"num_gpus":0,"output_data_dir":"/opt/ml/output/data","output_dir":"/opt/ml/output","output_intermediate_dir":"/opt/ml/output/intermediate","resource_config":{"current_host":"algo-1-8m8ve","hosts":["algo-1-8m8ve"]},"user_entry_point":"train_and_serve.py"} kc4ahx6e84-algo-1-8m8ve | SM_USER_ARGS=["--dummy_param_1","val1","--dummy_param_2","val2"] kc4ahx6e84-algo-1-8m8ve | SM_OUTPUT_INTERMEDIATE_DIR=/opt/ml/output/intermediate kc4ahx6e84-algo-1-8m8ve | SM_HP_DUMMY_PARAM_1=val1 kc4ahx6e84-algo-1-8m8ve | SM_HP_DUMMY_PARAM_2=val2 kc4ahx6e84-algo-1-8m8ve | PYTHONPATH=/opt/ml/code:/miniconda3/bin:/miniconda3/lib/python38.zip:/miniconda3/lib/python3.8:/miniconda3/lib/python3.8/lib-dynload:/miniconda3/lib/python3.8/site-packages kc4ahx6e84-algo-1-8m8ve | kc4ahx6e84-algo-1-8m8ve | Invoking script with the following command: 
kc4ahx6e84-algo-1-8m8ve | kc4ahx6e84-algo-1-8m8ve | /miniconda3/bin/python train_and_serve.py --dummy_param_1 val1 --dummy_param_2 val2 kc4ahx6e84-algo-1-8m8ve | kc4ahx6e84-algo-1-8m8ve | kc4ahx6e84-algo-1-8m8ve | *** Hello from the SageMaker script mode*** kc4ahx6e84-algo-1-8m8ve | 2022-07-17 15:23:46,657 sagemaker-containers INFO Reporting training SUCCESS kc4ahx6e84-algo-1-8m8ve exited with code 0 Aborting on container exit... ===== Job Complete =====

From the output we can see that our hyperparameters are passed to our training script as command line arguments. This is an important point and we will update our script using this information.
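Since the container invokes the script with `--dummy_param_1 val1 --dummy_param_2 val2`, a training script could pick these values up with `argparse`. The following is only a minimal sketch: the helper name `parse_hyperparameters` and the `None` defaults are assumptions made for illustration and are not part of the original script.

```python
import argparse


def parse_hyperparameters(argv=None):
    """Parse hyperparameters that arrive as command line arguments."""
    parser = argparse.ArgumentParser()
    # Argument names mirror the dummy hyperparameters used in this post.
    parser.add_argument("--dummy_param_1", type=str, default=None)
    parser.add_argument("--dummy_param_2", type=str, default=None)
    # parse_known_args ignores extra arguments the script does not declare,
    # instead of exiting with an error.
    args, _unknown = parser.parse_known_args(argv)
    return args
```

Inside the training script you would call `parse_hyperparameters()` without arguments so that it reads `sys.argv`; passing an explicit list is mainly useful for testing the parser outside the container.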

SageMaker SKLearn container environment variables

Let’s now discuss some important environment variables we see in the output.

SM_MODULE_DIR

SM_MODULE_DIR=s3://sagemaker-us-east-1-801598032724/sagemaker-scikit-learn-2022-07-13-13-05-48-675/source/sourcedir.tar.gz

SM_MODULE_DIR points to the S3 location where SageMaker automatically backs up our source code for that particular run. SageMaker creates a separate folder in the default bucket for each new run. The default value is s3://sagemaker-{aws-region}-{aws-id}/{training-job-name}/source/sourcedir.tar.gz

Note: We used local_code for the SKLearn estimator, so why is the source code backed up to the S3 bucket? Shouldn’t it stay on the local system and bypass S3 altogether in local mode? That would be the expected default behavior, but the SageMaker SDK appears to do otherwise: even in local mode it uses the S3 bucket to keep the source code. You can read more about this behavior in the issue ticket Model repack always uploads data to S3 bucket regardless of local mode settings
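If you want to locate the backed-up source archive yourself, the SM_MODULE_DIR value can be split into a bucket name and an object key. This is a small sketch using only the standard library; `split_s3_uri` is a hypothetical helper name, not something provided by SageMaker.

```python
from urllib.parse import urlparse


def split_s3_uri(uri):
    """Split an s3://bucket/key URI into a (bucket, key) tuple."""
    parsed = urlparse(uri)
    if parsed.scheme != "s3":
        raise ValueError(f"not an S3 URI: {uri}")
    # netloc holds the bucket name; path holds the key with a leading slash.
    return parsed.netloc, parsed.path.lstrip("/")
```

The resulting bucket and key could then be handed to whatever S3 client you already use to download and inspect sourcedir.tar.gz.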

SM_MODEL_DIR

SM_MODEL_DIR=/opt/ml/model

SM_MODEL_DIR points to a directory located inside the container. When the training job finishes, the container and its file system will be deleted, except for the /opt/ml/model and /opt/ml/output directories. Use /opt/ml/model to save the trained model artifacts. These artifacts are uploaded to S3 for model hosting.
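As an illustration of saving artifacts to this directory, here is a minimal sketch. It uses `pickle` from the standard library to stay dependency-free (for scikit-learn models, `joblib.dump` is a common alternative). The helper name `save_model` and the file name `model.pkl` are assumptions chosen for this example.

```python
import os
import pickle


def save_model(model, model_dir=None):
    """Serialize `model` into the model directory and return the file path."""
    # Fall back to the default path shown above when SM_MODEL_DIR is not set.
    model_dir = model_dir or os.environ.get("SM_MODEL_DIR", "/opt/ml/model")
    os.makedirs(model_dir, exist_ok=True)
    model_path = os.path.join(model_dir, "model.pkl")  # hypothetical file name
    with open(model_path, "wb") as f:
        pickle.dump(model, f)
    return model_path
```

Reading the directory from the environment rather than hard-coding it keeps the same script usable outside the container, where you can pass any writable `model_dir`.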

SM_OUTPUT_DATA_DIR

SM_OUTPUT_DATA_DIR=/opt/ml/output/data

SM_OUTPUT_DATA_DIR points to a directory in the container to write output artifacts. Output artifacts may include checkpoints, graphs, and other files to save, not including model artifacts. These artifacts are compressed and uploaded to S3 to the same S3 prefix as the model artifacts.
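For example, a script might store evaluation results next to the model as a JSON file in the directory that SM_OUTPUT_DATA_DIR points to (/opt/ml/output/data in the container logs). This is a hedged sketch: `write_metrics` and the file name `metrics.json` are hypothetical names used only for illustration.

```python
import json
import os


def write_metrics(metrics, output_data_dir=None):
    """Write a metrics dictionary as JSON into the output data directory."""
    # Default matches the SM_OUTPUT_DATA_DIR value printed in the container logs.
    output_data_dir = output_data_dir or os.environ.get(
        "SM_OUTPUT_DATA_DIR", "/opt/ml/output/data"
    )
    os.makedirs(output_data_dir, exist_ok=True)
    path = os.path.join(output_data_dir, "metrics.json")  # hypothetical file name
    with open(path, "w") as f:
        json.dump(metrics, f, indent=2)
    return path
```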

SM_CHANNELS

SM_CHANNELS='["testing","training"]'

A channel is a named input source that training algorithms can consume. You can partition your training data into different logical “channels” when you run training. Depending on your problem, some common channel ideas are: “training”, “testing”, “evaluation” or “images” and “labels”. You can read more about the channels from SageMaker API reference Channel

SM_CHANNEL_{channel_name}

SM_CHANNEL_TRAIN='/opt/ml/input/data/train'

SM_CHANNEL_TEST='/opt/ml/input/data/test'

Suppose that you have passed two input channels, ‘train’ and ‘test’, to the Scikit-learn estimator’s fit() method. The following variables will then be set, following the format SM_CHANNEL_[channel_name]:

- SM_CHANNEL_TRAIN: points to the directory in the container where the train channel data has been downloaded

- SM_CHANNEL_TEST: same as above, but for the test channel

Note that the channel names train and test are only conventions. You can use any name here, and the environment variables will be created accordingly. It is important to know that the SageMaker container automatically downloads the data from the provided input channels and makes them available in the respective local directories once it starts executing. The training script can then load the data from the local container directories.
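To make the relationship between SM_CHANNELS and the per-channel variables concrete, here is a minimal sketch that maps every channel name to its local directory. The helper name `channel_dirs` is hypothetical, and the upper-casing of the channel name is inferred from the `train` to SM_CHANNEL_TRAIN naming shown above.

```python
import json
import os


def channel_dirs(environ=None):
    """Map each input channel name to its local data directory."""
    environ = os.environ if environ is None else environ
    # SM_CHANNELS holds a JSON list of channel names, e.g. '["train"]'.
    channels = json.loads(environ.get("SM_CHANNELS", "[]"))
    dirs = {}
    for name in channels:
        # The per-channel variable uses the upper-cased channel name.
        dirs[name] = environ.get(f"SM_CHANNEL_{name.upper()}")
    return dirs
```

Accepting an optional `environ` mapping makes the function easy to try outside the container with a plain dictionary instead of the real process environment.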

There are more environment variables available, and you can read about them from Environment variables

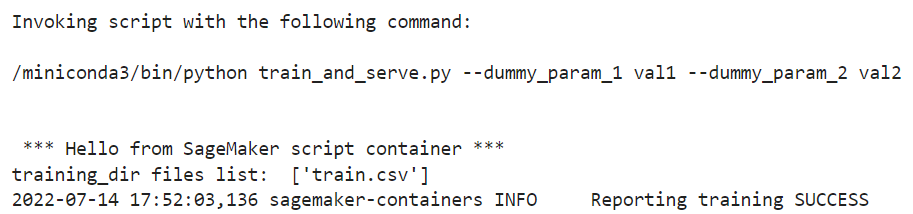

Pass input channel to SKLearn estimator

Now that we understand the SKLearn container environment better, let’s pass the training data channel to the estimator and see if the data becomes available inside the container directory.

Update our script to list all the files in the SM_CHANNEL_TRAIN directory.

%%writefile $script_file

import argparse, os, sys

if __name__ == "__main__":

    print(" *** Hello from SageMaker script container *** ")

    training_dir = os.environ.get("SM_CHANNEL_TRAIN")
    dir_list = os.listdir(training_dir)
    print("training_dir files list: ", dir_list)

Overwriting ./datasets/2022-07-07-sagemaker-script-mode/src/train_and_serve.py

#collapse-output

sk_estimator = SKLearn(
    entry_point=script_file,
    role=role,
    instance_count=1,
    instance_type='local',
    framework_version="1.0-1",
    hyperparameters={"dummy_param_1":"val1","dummy_param_2":"val2"},
)

sk_estimator.fit({"train": f"file://{local_train_path}"})

Creating wp2g5fxyg1-algo-1-o05g1 ... Creating wp2g5fxyg1-algo-1-o05g1 ... done Attaching to wp2g5fxyg1-algo-1-o05g1 wp2g5fxyg1-algo-1-o05g1 | 2022-07-17 15:23:49,444 sagemaker-containers INFO Imported framework sagemaker_sklearn_container.training wp2g5fxyg1-algo-1-o05g1 | 2022-07-17 15:23:49,447 sagemaker-training-toolkit INFO No GPUs detected (normal if no gpus installed) wp2g5fxyg1-algo-1-o05g1 | 2022-07-17 15:23:49,456 sagemaker_sklearn_container.training INFO Invoking user training script. wp2g5fxyg1-algo-1-o05g1 | 2022-07-17 15:23:49,638 sagemaker-training-toolkit INFO No GPUs detected (normal if no gpus installed) wp2g5fxyg1-algo-1-o05g1 | 2022-07-17 15:23:49,653 sagemaker-training-toolkit INFO No GPUs detected (normal if no gpus installed) wp2g5fxyg1-algo-1-o05g1 | 2022-07-17 15:23:49,667 sagemaker-training-toolkit INFO No GPUs detected (normal if no gpus installed) wp2g5fxyg1-algo-1-o05g1 | 2022-07-17 15:23:49,676 sagemaker-training-toolkit INFO Invoking user script wp2g5fxyg1-algo-1-o05g1 | wp2g5fxyg1-algo-1-o05g1 | Training Env: wp2g5fxyg1-algo-1-o05g1 | wp2g5fxyg1-algo-1-o05g1 | { wp2g5fxyg1-algo-1-o05g1 | "additional_framework_parameters": {}, wp2g5fxyg1-algo-1-o05g1 | "channel_input_dirs": { wp2g5fxyg1-algo-1-o05g1 | "train": "/opt/ml/input/data/train" wp2g5fxyg1-algo-1-o05g1 | }, wp2g5fxyg1-algo-1-o05g1 | "current_host": "algo-1-o05g1", wp2g5fxyg1-algo-1-o05g1 | "framework_module": "sagemaker_sklearn_container.training:main", wp2g5fxyg1-algo-1-o05g1 | "hosts": [ wp2g5fxyg1-algo-1-o05g1 | "algo-1-o05g1" wp2g5fxyg1-algo-1-o05g1 | ], wp2g5fxyg1-algo-1-o05g1 | "hyperparameters": { wp2g5fxyg1-algo-1-o05g1 | "dummy_param_1": "val1", wp2g5fxyg1-algo-1-o05g1 | "dummy_param_2": "val2" wp2g5fxyg1-algo-1-o05g1 | }, wp2g5fxyg1-algo-1-o05g1 | "input_config_dir": "/opt/ml/input/config", wp2g5fxyg1-algo-1-o05g1 | "input_data_config": { wp2g5fxyg1-algo-1-o05g1 | "train": { wp2g5fxyg1-algo-1-o05g1 | 
"TrainingInputMode": "File" wp2g5fxyg1-algo-1-o05g1 | } wp2g5fxyg1-algo-1-o05g1 | }, wp2g5fxyg1-algo-1-o05g1 | "input_dir": "/opt/ml/input", wp2g5fxyg1-algo-1-o05g1 | "is_master": true, wp2g5fxyg1-algo-1-o05g1 | "job_name": "sagemaker-scikit-learn-2022-07-17-15-23-47-051", wp2g5fxyg1-algo-1-o05g1 | "log_level": 20, wp2g5fxyg1-algo-1-o05g1 | "master_hostname": "algo-1-o05g1", wp2g5fxyg1-algo-1-o05g1 | "model_dir": "/opt/ml/model", wp2g5fxyg1-algo-1-o05g1 | "module_dir": "s3://sagemaker-us-east-1-801598032724/sagemaker-scikit-learn-2022-07-17-15-23-47-051/source/sourcedir.tar.gz", wp2g5fxyg1-algo-1-o05g1 | "module_name": "train_and_serve", wp2g5fxyg1-algo-1-o05g1 | "network_interface_name": "eth0", wp2g5fxyg1-algo-1-o05g1 | "num_cpus": 2, wp2g5fxyg1-algo-1-o05g1 | "num_gpus": 0, wp2g5fxyg1-algo-1-o05g1 | "output_data_dir": "/opt/ml/output/data", wp2g5fxyg1-algo-1-o05g1 | "output_dir": "/opt/ml/output", wp2g5fxyg1-algo-1-o05g1 | "output_intermediate_dir": "/opt/ml/output/intermediate", wp2g5fxyg1-algo-1-o05g1 | "resource_config": { wp2g5fxyg1-algo-1-o05g1 | "current_host": "algo-1-o05g1", wp2g5fxyg1-algo-1-o05g1 | "hosts": [ wp2g5fxyg1-algo-1-o05g1 | "algo-1-o05g1" wp2g5fxyg1-algo-1-o05g1 | ] wp2g5fxyg1-algo-1-o05g1 | }, wp2g5fxyg1-algo-1-o05g1 | "user_entry_point": "train_and_serve.py" wp2g5fxyg1-algo-1-o05g1 | } wp2g5fxyg1-algo-1-o05g1 | wp2g5fxyg1-algo-1-o05g1 | Environment variables: wp2g5fxyg1-algo-1-o05g1 | wp2g5fxyg1-algo-1-o05g1 | SM_HOSTS=["algo-1-o05g1"] wp2g5fxyg1-algo-1-o05g1 | SM_NETWORK_INTERFACE_NAME=eth0 wp2g5fxyg1-algo-1-o05g1 | SM_HPS={"dummy_param_1":"val1","dummy_param_2":"val2"} wp2g5fxyg1-algo-1-o05g1 | SM_USER_ENTRY_POINT=train_and_serve.py wp2g5fxyg1-algo-1-o05g1 | SM_FRAMEWORK_PARAMS={} wp2g5fxyg1-algo-1-o05g1 | SM_RESOURCE_CONFIG={"current_host":"algo-1-o05g1","hosts":["algo-1-o05g1"]} wp2g5fxyg1-algo-1-o05g1 | SM_INPUT_DATA_CONFIG={"train":{"TrainingInputMode":"File"}} wp2g5fxyg1-algo-1-o05g1 | SM_OUTPUT_DATA_DIR=/opt/ml/output/data 
wp2g5fxyg1-algo-1-o05g1 | SM_CHANNELS=["train"] wp2g5fxyg1-algo-1-o05g1 | SM_CURRENT_HOST=algo-1-o05g1 wp2g5fxyg1-algo-1-o05g1 | SM_MODULE_NAME=train_and_serve wp2g5fxyg1-algo-1-o05g1 | SM_LOG_LEVEL=20 wp2g5fxyg1-algo-1-o05g1 | SM_FRAMEWORK_MODULE=sagemaker_sklearn_container.training:main wp2g5fxyg1-algo-1-o05g1 | SM_INPUT_DIR=/opt/ml/input wp2g5fxyg1-algo-1-o05g1 | SM_INPUT_CONFIG_DIR=/opt/ml/input/config wp2g5fxyg1-algo-1-o05g1 | SM_OUTPUT_DIR=/opt/ml/output wp2g5fxyg1-algo-1-o05g1 | SM_NUM_CPUS=2 wp2g5fxyg1-algo-1-o05g1 | SM_NUM_GPUS=0 wp2g5fxyg1-algo-1-o05g1 | SM_MODEL_DIR=/opt/ml/model wp2g5fxyg1-algo-1-o05g1 | SM_MODULE_DIR=s3://sagemaker-us-east-1-801598032724/sagemaker-scikit-learn-2022-07-17-15-23-47-051/source/sourcedir.tar.gz wp2g5fxyg1-algo-1-o05g1 | SM_TRAINING_ENV={"additional_framework_parameters":{},"channel_input_dirs":{"train":"/opt/ml/input/data/train"},"current_host":"algo-1-o05g1","framework_module":"sagemaker_sklearn_container.training:main","hosts":["algo-1-o05g1"],"hyperparameters":{"dummy_param_1":"val1","dummy_param_2":"val2"},"input_config_dir":"/opt/ml/input/config","input_data_config":{"train":{"TrainingInputMode":"File"}},"input_dir":"/opt/ml/input","is_master":true,"job_name":"sagemaker-scikit-learn-2022-07-17-15-23-47-051","log_level":20,"master_hostname":"algo-1-o05g1","model_dir":"/opt/ml/model","module_dir":"s3://sagemaker-us-east-1-801598032724/sagemaker-scikit-learn-2022-07-17-15-23-47-051/source/sourcedir.tar.gz","module_name":"train_and_serve","network_interface_name":"eth0","num_cpus":2,"num_gpus":0,"output_data_dir":"/opt/ml/output/data","output_dir":"/opt/ml/output","output_intermediate_dir":"/opt/ml/output/intermediate","resource_config":{"current_host":"algo-1-o05g1","hosts":["algo-1-o05g1"]},"user_entry_point":"train_and_serve.py"} wp2g5fxyg1-algo-1-o05g1 | SM_USER_ARGS=["--dummy_param_1","val1","--dummy_param_2","val2"] wp2g5fxyg1-algo-1-o05g1 | SM_OUTPUT_INTERMEDIATE_DIR=/opt/ml/output/intermediate 
wp2g5fxyg1-algo-1-o05g1 | SM_CHANNEL_TRAIN=/opt/ml/input/data/train wp2g5fxyg1-algo-1-o05g1 | SM_HP_DUMMY_PARAM_1=val1 wp2g5fxyg1-algo-1-o05g1 | SM_HP_DUMMY_PARAM_2=val2 wp2g5fxyg1-algo-1-o05g1 | PYTHONPATH=/opt/ml/code:/miniconda3/bin:/miniconda3/lib/python38.zip:/miniconda3/lib/python3.8:/miniconda3/lib/python3.8/lib-dynload:/miniconda3/lib/python3.8/site-packages wp2g5fxyg1-algo-1-o05g1 | wp2g5fxyg1-algo-1-o05g1 | Invoking script with the following command: wp2g5fxyg1-algo-1-o05g1 | wp2g5fxyg1-algo-1-o05g1 | /miniconda3/bin/python train_and_serve.py --dummy_param_1 val1 --dummy_param_2 val2 wp2g5fxyg1-algo-1-o05g1 | wp2g5fxyg1-algo-1-o05g1 | wp2g5fxyg1-algo-1-o05g1 | *** Hello from SageMaker script container *** wp2g5fxyg1-algo-1-o05g1 | training_dir files list: ['train.csv'] wp2g5fxyg1-algo-1-o05g1 | 2022-07-17 15:23:49,715 sagemaker-containers INFO Reporting training SUCCESS wp2g5fxyg1-algo-1-o05g1 exited with code 0 Aborting on container exit... ===== Job Complete =====

From the output, we can see that train.csv, which was in our local environment, is now available inside the container on path SM_CHANNEL_TRAIN=/opt/ml/input/data/train.
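Once the channel data is inside the container, the script can read it like any other local file. The sketch below assumes the channel directory contains CSV files (as with train.csv here) and simply counts the rows in each one; `count_csv_rows` is a hypothetical helper, not part of the original script.

```python
import csv
import os


def count_csv_rows(training_dir):
    """Return {file name: number of rows} for every CSV file in training_dir."""
    counts = {}
    for name in sorted(os.listdir(training_dir)):
        if not name.endswith(".csv"):
            continue  # skip anything that is not a CSV file
        with open(os.path.join(training_dir, name), newline="") as f:
            # Every line parsed by csv.reader counts, including a header row.
            counts[name] = sum(1 for _ in csv.reader(f))
    return counts
```

In the training script this could be called with `os.environ.get("SM_CHANNEL_TRAIN")` as the directory, as a quick sanity check before loading the data for training.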

Let’s also test the same with our training data on the S3 bucket.

#collapse-output

sk_estimator = SKLearn(
    entry_point=script_file,
    role=role,
    instance_count=1,
    instance_type='local',
    framework_version="1.0-1",
    hyperparameters={"dummy_param_1":"val1","dummy_param_2":"val2"},
)

sk_estimator.fit({"train": s3_train_uri})

Creating 7ao431iiu5-algo-1-9jid1 ... Creating 7ao431iiu5-algo-1-9jid1 ... done Attaching to 7ao431iiu5-algo-1-9jid1 7ao431iiu5-algo-1-9jid1 | 2022-07-17 15:23:53,073 sagemaker-containers INFO Imported framework sagemaker_sklearn_container.training 7ao431iiu5-algo-1-9jid1 | 2022-07-17 15:23:53,079 sagemaker-training-toolkit INFO No GPUs detected (normal if no gpus installed) 7ao431iiu5-algo-1-9jid1 | 2022-07-17 15:23:53,094 sagemaker_sklearn_container.training INFO Invoking user training script. 7ao431iiu5-algo-1-9jid1 | 2022-07-17 15:23:53,335 sagemaker-training-toolkit INFO No GPUs detected (normal if no gpus installed) 7ao431iiu5-algo-1-9jid1 | 2022-07-17 15:23:53,348 sagemaker-training-toolkit INFO No GPUs detected (normal if no gpus installed) 7ao431iiu5-algo-1-9jid1 | 2022-07-17 15:23:53,360 sagemaker-training-toolkit INFO No GPUs detected (normal if no gpus installed) 7ao431iiu5-algo-1-9jid1 | 2022-07-17 15:23:53,369 sagemaker-training-toolkit INFO Invoking user script 7ao431iiu5-algo-1-9jid1 | 7ao431iiu5-algo-1-9jid1 | Training Env: 7ao431iiu5-algo-1-9jid1 | 7ao431iiu5-algo-1-9jid1 | { 7ao431iiu5-algo-1-9jid1 | "additional_framework_parameters": {}, 7ao431iiu5-algo-1-9jid1 | "channel_input_dirs": { 7ao431iiu5-algo-1-9jid1 | "train": "/opt/ml/input/data/train" 7ao431iiu5-algo-1-9jid1 | }, 7ao431iiu5-algo-1-9jid1 | "current_host": "algo-1-9jid1", 7ao431iiu5-algo-1-9jid1 | "framework_module": "sagemaker_sklearn_container.training:main", 7ao431iiu5-algo-1-9jid1 | "hosts": [ 7ao431iiu5-algo-1-9jid1 | "algo-1-9jid1" 7ao431iiu5-algo-1-9jid1 | ], 7ao431iiu5-algo-1-9jid1 | "hyperparameters": { 7ao431iiu5-algo-1-9jid1 | "dummy_param_1": "val1", 7ao431iiu5-algo-1-9jid1 | "dummy_param_2": "val2" 7ao431iiu5-algo-1-9jid1 | }, 7ao431iiu5-algo-1-9jid1 | "input_config_dir": "/opt/ml/input/config", 7ao431iiu5-algo-1-9jid1 | "input_data_config": { 7ao431iiu5-algo-1-9jid1 | "train": { 7ao431iiu5-algo-1-9jid1 | "TrainingInputMode": "File" 
7ao431iiu5-algo-1-9jid1 | } 7ao431iiu5-algo-1-9jid1 | }, 7ao431iiu5-algo-1-9jid1 | "input_dir": "/opt/ml/input", 7ao431iiu5-algo-1-9jid1 | "is_master": true, 7ao431iiu5-algo-1-9jid1 | "job_name": "sagemaker-scikit-learn-2022-07-17-15-23-50-077", 7ao431iiu5-algo-1-9jid1 | "log_level": 20, 7ao431iiu5-algo-1-9jid1 | "master_hostname": "algo-1-9jid1", 7ao431iiu5-algo-1-9jid1 | "model_dir": "/opt/ml/model", 7ao431iiu5-algo-1-9jid1 | "module_dir": "s3://sagemaker-us-east-1-801598032724/sagemaker-scikit-learn-2022-07-17-15-23-50-077/source/sourcedir.tar.gz", 7ao431iiu5-algo-1-9jid1 | "module_name": "train_and_serve", 7ao431iiu5-algo-1-9jid1 | "network_interface_name": "eth0", 7ao431iiu5-algo-1-9jid1 | "num_cpus": 2, 7ao431iiu5-algo-1-9jid1 | "num_gpus": 0, 7ao431iiu5-algo-1-9jid1 | "output_data_dir": "/opt/ml/output/data", 7ao431iiu5-algo-1-9jid1 | "output_dir": "/opt/ml/output", 7ao431iiu5-algo-1-9jid1 | "output_intermediate_dir": "/opt/ml/output/intermediate", 7ao431iiu5-algo-1-9jid1 | "resource_config": { 7ao431iiu5-algo-1-9jid1 | "current_host": "algo-1-9jid1", 7ao431iiu5-algo-1-9jid1 | "hosts": [ 7ao431iiu5-algo-1-9jid1 | "algo-1-9jid1" 7ao431iiu5-algo-1-9jid1 | ] 7ao431iiu5-algo-1-9jid1 | }, 7ao431iiu5-algo-1-9jid1 | "user_entry_point": "train_and_serve.py" 7ao431iiu5-algo-1-9jid1 | } 7ao431iiu5-algo-1-9jid1 | 7ao431iiu5-algo-1-9jid1 | Environment variables: 7ao431iiu5-algo-1-9jid1 | 7ao431iiu5-algo-1-9jid1 | SM_HOSTS=["algo-1-9jid1"] 7ao431iiu5-algo-1-9jid1 | SM_NETWORK_INTERFACE_NAME=eth0 7ao431iiu5-algo-1-9jid1 | SM_HPS={"dummy_param_1":"val1","dummy_param_2":"val2"} 7ao431iiu5-algo-1-9jid1 | SM_USER_ENTRY_POINT=train_and_serve.py 7ao431iiu5-algo-1-9jid1 | SM_FRAMEWORK_PARAMS={} 7ao431iiu5-algo-1-9jid1 | SM_RESOURCE_CONFIG={"current_host":"algo-1-9jid1","hosts":["algo-1-9jid1"]} 7ao431iiu5-algo-1-9jid1 | SM_INPUT_DATA_CONFIG={"train":{"TrainingInputMode":"File"}} 7ao431iiu5-algo-1-9jid1 | SM_OUTPUT_DATA_DIR=/opt/ml/output/data 7ao431iiu5-algo-1-9jid1 | 
SM_CHANNELS=["train"] 7ao431iiu5-algo-1-9jid1 | SM_CURRENT_HOST=algo-1-9jid1 7ao431iiu5-algo-1-9jid1 | SM_MODULE_NAME=train_and_serve 7ao431iiu5-algo-1-9jid1 | SM_LOG_LEVEL=20 7ao431iiu5-algo-1-9jid1 | SM_FRAMEWORK_MODULE=sagemaker_sklearn_container.training:main 7ao431iiu5-algo-1-9jid1 | SM_INPUT_DIR=/opt/ml/input 7ao431iiu5-algo-1-9jid1 | SM_INPUT_CONFIG_DIR=/opt/ml/input/config 7ao431iiu5-algo-1-9jid1 | SM_OUTPUT_DIR=/opt/ml/output 7ao431iiu5-algo-1-9jid1 | SM_NUM_CPUS=2 7ao431iiu5-algo-1-9jid1 | SM_NUM_GPUS=0 7ao431iiu5-algo-1-9jid1 | SM_MODEL_DIR=/opt/ml/model 7ao431iiu5-algo-1-9jid1 | SM_MODULE_DIR=s3://sagemaker-us-east-1-801598032724/sagemaker-scikit-learn-2022-07-17-15-23-50-077/source/sourcedir.tar.gz 7ao431iiu5-algo-1-9jid1 | SM_TRAINING_ENV={"additional_framework_parameters":{},"channel_input_dirs":{"train":"/opt/ml/input/data/train"},"current_host":"algo-1-9jid1","framework_module":"sagemaker_sklearn_container.training:main","hosts":["algo-1-9jid1"],"hyperparameters":{"dummy_param_1":"val1","dummy_param_2":"val2"},"input_config_dir":"/opt/ml/input/config","input_data_config":{"train":{"TrainingInputMode":"File"}},"input_dir":"/opt/ml/input","is_master":true,"job_name":"sagemaker-scikit-learn-2022-07-17-15-23-50-077","log_level":20,"master_hostname":"algo-1-9jid1","model_dir":"/opt/ml/model","module_dir":"s3://sagemaker-us-east-1-801598032724/sagemaker-scikit-learn-2022-07-17-15-23-50-077/source/sourcedir.tar.gz","module_name":"train_and_serve","network_interface_name":"eth0","num_cpus":2,"num_gpus":0,"output_data_dir":"/opt/ml/output/data","output_dir":"/opt/ml/output","output_intermediate_dir":"/opt/ml/output/intermediate","resource_config":{"current_host":"algo-1-9jid1","hosts":["algo-1-9jid1"]},"user_entry_point":"train_and_serve.py"} 7ao431iiu5-algo-1-9jid1 | SM_USER_ARGS=["--dummy_param_1","val1","--dummy_param_2","val2"] 7ao431iiu5-algo-1-9jid1 | SM_OUTPUT_INTERMEDIATE_DIR=/opt/ml/output/intermediate 7ao431iiu5-algo-1-9jid1 | 
SM_CHANNEL_TRAIN=/opt/ml/input/data/train 7ao431iiu5-algo-1-9jid1 | SM_HP_DUMMY_PARAM_1=val1 7ao431iiu5-algo-1-9jid1 | SM_HP_DUMMY_PARAM_2=val2 7ao431iiu5-algo-1-9jid1 | PYTHONPATH=/opt/ml/code:/miniconda3/bin:/miniconda3/lib/python38.zip:/miniconda3/lib/python3.8:/miniconda3/lib/python3.8/lib-dynload:/miniconda3/lib/python3.8/site-packages 7ao431iiu5-algo-1-9jid1 | 7ao431iiu5-algo-1-9jid1 | Invoking script with the following command: 7ao431iiu5-algo-1-9jid1 | 7ao431iiu5-algo-1-9jid1 | /miniconda3/bin/python train_and_serve.py --dummy_param_1 val1 --dummy_param_2 val2 7ao431iiu5-algo-1-9jid1 | 7ao431iiu5-algo-1-9jid1 | 7ao431iiu5-algo-1-9jid1 | *** Hello from SageMaker script container *** 7ao431iiu5-algo-1-9jid1 | training_dir files list: ['train.csv'] 7ao431iiu5-algo-1-9jid1 | 2022-07-17 15:23:53,409 sagemaker-containers INFO Reporting training SUCCESS 7ao431iiu5-algo-1-9jid1 exited with code 0 Aborting on container exit... ===== Job Complete =====

Again, the results are the same: SageMaker downloads the data from the S3 bucket and makes it available in the container. In the environment variables section we also learned that two directories are special: /opt/ml/model and /opt/ml/output/data. The container environment variables SM_MODEL_DIR and SM_OUTPUT_DATA_DIR point to them, respectively. Whatever artifacts we put in them are uploaded to the S3 bucket when the training job finishes. SM_MODEL_DIR is for trained models, and SM_OUTPUT_DATA_DIR is for other artifacts such as logs, graphs, plots, and results. Let’s update our training script to put some dummy data in these directories. Once the job is complete, we will verify the stored artifacts in the S3 bucket.

%%writefile $script_file

import argparse, os, sys

if __name__ == "__main__":
    print(" *** Hello from SageMaker script container *** ")

    # list files in SM_CHANNEL_TRAIN
    training_dir = os.environ.get("SM_CHANNEL_TRAIN")
    dir_list = os.listdir(training_dir)
    print("training_dir files list: ", dir_list)

    # write dummy model file to SM_MODEL_DIR
    sm_model_dir = os.environ.get("SM_MODEL_DIR")
    with open(f"{sm_model_dir}/dummy-model.txt", "w") as f:
        f.write("this is a dummy model")

    # write dummy artifact file to SM_OUTPUT_DATA_DIR
    sm_output_data_dir = os.environ.get("SM_OUTPUT_DATA_DIR")
    with open(f"{sm_output_data_dir}/dummy-output-data.txt", "w") as f:
        f.write("this is a dummy output data")

Overwriting ./datasets/2022-07-07-sagemaker-script-mode/src/train_and_serve.py
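Before launching a training job, the file-writing logic can be smoke-tested outside the container. The following is a minimal sketch, assuming we point SM_MODEL_DIR and SM_OUTPUT_DATA_DIR at temporary stand-in directories ourselves (inside the real container SageMaker sets them). The helper name write_dummy_artifacts is illustrative and not part of the SageMaker SDK.

```python
import os
import tempfile
from pathlib import Path


def write_dummy_artifacts():
    """Mimic the training script: write one file to each special directory."""
    model_dir = Path(os.environ["SM_MODEL_DIR"])
    output_data_dir = Path(os.environ["SM_OUTPUT_DATA_DIR"])
    (model_dir / "dummy-model.txt").write_text("this is a dummy model")
    (output_data_dir / "dummy-output-data.txt").write_text("this is a dummy output data")
    return model_dir, output_data_dir


# Assumption: temporary directories stand in for /opt/ml/model and /opt/ml/output/data.
with tempfile.TemporaryDirectory() as root:
    for var, sub in [("SM_MODEL_DIR", "model"), ("SM_OUTPUT_DATA_DIR", "output/data")]:
        path = Path(root) / sub
        path.mkdir(parents=True)
        os.environ[var] = str(path)

    model_dir, output_data_dir = write_dummy_artifacts()
    # Collect the file names found in both directories.
    written = sorted(p.name for d in (model_dir, output_data_dir) for p in d.iterdir())
    print(written)
```

Using os.environ["..."] instead of os.environ.get(...) makes a missing variable fail immediately with a KeyError, rather than later with a path that starts with "None".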

sk_estimator = SKLearn(
    entry_point=script_file,
    role=role,
    instance_count=1,
    instance_type='local',
    framework_version="1.0-1",
    hyperparameters={"dummy_param_1":"val1","dummy_param_2":"val2"},
)

sk_estimator.fit({"train": s3_train_uri})

Creating c30093mavu-algo-1-p87y9 ... Creating c30093mavu-algo-1-p87y9 ... done Attaching to c30093mavu-algo-1-p87y9 c30093mavu-algo-1-p87y9 | 2022-07-17 15:23:56,051 sagemaker-containers INFO Imported framework sagemaker_sklearn_container.training c30093mavu-algo-1-p87y9 | 2022-07-17 15:23:56,055 sagemaker-training-toolkit INFO No GPUs detected (normal if no gpus installed) c30093mavu-algo-1-p87y9 | 2022-07-17 15:23:56,065 sagemaker_sklearn_container.training INFO Invoking user training script. c30093mavu-algo-1-p87y9 | 2022-07-17 15:23:56,251 sagemaker-training-toolkit INFO No GPUs detected (normal if no gpus installed) c30093mavu-algo-1-p87y9 | 2022-07-17 15:23:56,267 sagemaker-training-toolkit INFO No GPUs detected (normal if no gpus installed) c30093mavu-algo-1-p87y9 | 2022-07-17 15:23:56,281 sagemaker-training-toolkit INFO No GPUs detected (normal if no gpus installed) c30093mavu-algo-1-p87y9 | 2022-07-17 15:23:56,291 sagemaker-training-toolkit INFO Invoking user script c30093mavu-algo-1-p87y9 | c30093mavu-algo-1-p87y9 | Training Env: c30093mavu-algo-1-p87y9 | c30093mavu-algo-1-p87y9 | { c30093mavu-algo-1-p87y9 | "additional_framework_parameters": {}, c30093mavu-algo-1-p87y9 | "channel_input_dirs": { c30093mavu-algo-1-p87y9 | "train": "/opt/ml/input/data/train" c30093mavu-algo-1-p87y9 | }, c30093mavu-algo-1-p87y9 | "current_host": "algo-1-p87y9", c30093mavu-algo-1-p87y9 | "framework_module": "sagemaker_sklearn_container.training:main", c30093mavu-algo-1-p87y9 | "hosts": [ c30093mavu-algo-1-p87y9 | "algo-1-p87y9" c30093mavu-algo-1-p87y9 | ], c30093mavu-algo-1-p87y9 | "hyperparameters": { c30093mavu-algo-1-p87y9 | "dummy_param_1": "val1", c30093mavu-algo-1-p87y9 | "dummy_param_2": "val2" c30093mavu-algo-1-p87y9 | }, c30093mavu-algo-1-p87y9 | "input_config_dir": "/opt/ml/input/config", c30093mavu-algo-1-p87y9 | "input_data_config": { c30093mavu-algo-1-p87y9 | "train": { c30093mavu-algo-1-p87y9 | "TrainingInputMode": "File" 
c30093mavu-algo-1-p87y9 | } c30093mavu-algo-1-p87y9 | }, c30093mavu-algo-1-p87y9 | "input_dir": "/opt/ml/input", c30093mavu-algo-1-p87y9 | "is_master": true, c30093mavu-algo-1-p87y9 | "job_name": "sagemaker-scikit-learn-2022-07-17-15-23-53-775", c30093mavu-algo-1-p87y9 | "log_level": 20, c30093mavu-algo-1-p87y9 | "master_hostname": "algo-1-p87y9", c30093mavu-algo-1-p87y9 | "model_dir": "/opt/ml/model", c30093mavu-algo-1-p87y9 | "module_dir": "s3://sagemaker-us-east-1-801598032724/sagemaker-scikit-learn-2022-07-17-15-23-53-775/source/sourcedir.tar.gz", c30093mavu-algo-1-p87y9 | "module_name": "train_and_serve", c30093mavu-algo-1-p87y9 | "network_interface_name": "eth0", c30093mavu-algo-1-p87y9 | "num_cpus": 2, c30093mavu-algo-1-p87y9 | "num_gpus": 0, c30093mavu-algo-1-p87y9 | "output_data_dir": "/opt/ml/output/data", c30093mavu-algo-1-p87y9 | "output_dir": "/opt/ml/output", c30093mavu-algo-1-p87y9 | "output_intermediate_dir": "/opt/ml/output/intermediate", c30093mavu-algo-1-p87y9 | "resource_config": { c30093mavu-algo-1-p87y9 | "current_host": "algo-1-p87y9", c30093mavu-algo-1-p87y9 | "hosts": [ c30093mavu-algo-1-p87y9 | "algo-1-p87y9" c30093mavu-algo-1-p87y9 | ] c30093mavu-algo-1-p87y9 | }, c30093mavu-algo-1-p87y9 | "user_entry_point": "train_and_serve.py" c30093mavu-algo-1-p87y9 | } c30093mavu-algo-1-p87y9 | c30093mavu-algo-1-p87y9 | Environment variables: c30093mavu-algo-1-p87y9 | c30093mavu-algo-1-p87y9 | SM_HOSTS=["algo-1-p87y9"] c30093mavu-algo-1-p87y9 | SM_NETWORK_INTERFACE_NAME=eth0 c30093mavu-algo-1-p87y9 | SM_HPS={"dummy_param_1":"val1","dummy_param_2":"val2"} c30093mavu-algo-1-p87y9 | SM_USER_ENTRY_POINT=train_and_serve.py c30093mavu-algo-1-p87y9 | SM_FRAMEWORK_PARAMS={} c30093mavu-algo-1-p87y9 | SM_RESOURCE_CONFIG={"current_host":"algo-1-p87y9","hosts":["algo-1-p87y9"]} c30093mavu-algo-1-p87y9 | SM_INPUT_DATA_CONFIG={"train":{"TrainingInputMode":"File"}} c30093mavu-algo-1-p87y9 | SM_OUTPUT_DATA_DIR=/opt/ml/output/data c30093mavu-algo-1-p87y9 | 
SM_CHANNELS=["train"] c30093mavu-algo-1-p87y9 | SM_CURRENT_HOST=algo-1-p87y9 c30093mavu-algo-1-p87y9 | SM_MODULE_NAME=train_and_serve c30093mavu-algo-1-p87y9 | SM_LOG_LEVEL=20 c30093mavu-algo-1-p87y9 | SM_FRAMEWORK_MODULE=sagemaker_sklearn_container.training:main c30093mavu-algo-1-p87y9 | SM_INPUT_DIR=/opt/ml/input c30093mavu-algo-1-p87y9 | SM_INPUT_CONFIG_DIR=/opt/ml/input/config c30093mavu-algo-1-p87y9 | SM_OUTPUT_DIR=/opt/ml/output c30093mavu-algo-1-p87y9 | SM_NUM_CPUS=2 c30093mavu-algo-1-p87y9 | SM_NUM_GPUS=0 c30093mavu-algo-1-p87y9 | SM_MODEL_DIR=/opt/ml/model c30093mavu-algo-1-p87y9 | SM_MODULE_DIR=s3://sagemaker-us-east-1-801598032724/sagemaker-scikit-learn-2022-07-17-15-23-53-775/source/sourcedir.tar.gz c30093mavu-algo-1-p87y9 | SM_TRAINING_ENV={"additional_framework_parameters":{},"channel_input_dirs":{"train":"/opt/ml/input/data/train"},"current_host":"algo-1-p87y9","framework_module":"sagemaker_sklearn_container.training:main","hosts":["algo-1-p87y9"],"hyperparameters":{"dummy_param_1":"val1","dummy_param_2":"val2"},"input_config_dir":"/opt/ml/input/config","input_data_config":{"train":{"TrainingInputMode":"File"}},"input_dir":"/opt/ml/input","is_master":true,"job_name":"sagemaker-scikit-learn-2022-07-17-15-23-53-775","log_level":20,"master_hostname":"algo-1-p87y9","model_dir":"/opt/ml/model","module_dir":"s3://sagemaker-us-east-1-801598032724/sagemaker-scikit-learn-2022-07-17-15-23-53-775/source/sourcedir.tar.gz","module_name":"train_and_serve","network_interface_name":"eth0","num_cpus":2,"num_gpus":0,"output_data_dir":"/opt/ml/output/data","output_dir":"/opt/ml/output","output_intermediate_dir":"/opt/ml/output/intermediate","resource_config":{"current_host":"algo-1-p87y9","hosts":["algo-1-p87y9"]},"user_entry_point":"train_and_serve.py"} c30093mavu-algo-1-p87y9 | SM_USER_ARGS=["--dummy_param_1","val1","--dummy_param_2","val2"] c30093mavu-algo-1-p87y9 | SM_OUTPUT_INTERMEDIATE_DIR=/opt/ml/output/intermediate c30093mavu-algo-1-p87y9 | 
SM_CHANNEL_TRAIN=/opt/ml/input/data/train c30093mavu-algo-1-p87y9 | SM_HP_DUMMY_PARAM_1=val1 c30093mavu-algo-1-p87y9 | SM_HP_DUMMY_PARAM_2=val2 c30093mavu-algo-1-p87y9 | PYTHONPATH=/opt/ml/code:/miniconda3/bin:/miniconda3/lib/python38.zip:/miniconda3/lib/python3.8:/miniconda3/lib/python3.8/lib-dynload:/miniconda3/lib/python3.8/site-packages c30093mavu-algo-1-p87y9 | c30093mavu-algo-1-p87y9 | Invoking script with the following command: c30093mavu-algo-1-p87y9 | c30093mavu-algo-1-p87y9 | /miniconda3/bin/python train_and_serve.py --dummy_param_1 val1 --dummy_param_2 val2 c30093mavu-algo-1-p87y9 | c30093mavu-algo-1-p87y9 | c30093mavu-algo-1-p87y9 | *** Hello from SageMaker script container *** c30093mavu-algo-1-p87y9 | training_dir files list: ['train.csv'] c30093mavu-algo-1-p87y9 | 2022-07-17 15:23:56,328 sagemaker-containers INFO Reporting training SUCCESS c30093mavu-algo-1-p87y9 exited with code 0 Aborting on container exit...

Failed to delete: /tmp/tmpuwvrle8_/algo-1-p87y9 Please remove it manually.
===== Job Complete =====

Our training job is now complete. Let us check the S3 bucket to see if our dummy model and other artifacts are present.

First, we need the S3 URI for these artifacts. For our dummy model (from SM_MODEL_DIR), we can get the URI from our estimator object through its model_data attribute.

's3://sagemaker-us-east-1-801598032724/sagemaker-scikit-learn-2022-07-17-15-23-53-775/model.tar.gz'

Let’s download model_data from S3 to a local directory for verification. For this, we create a local tmp directory to store the downloaded files.

local_tmp_path = local_path + "/tmp"
print(local_tmp_path)

# create the local '/tmp' directory
Path(local_tmp_path).mkdir(parents=True, exist_ok=True)

./datasets/2022-07-07-sagemaker-script-mode/tmp

We will use the SageMaker S3Downloader object to download the model file.

The file is downloaded. Let’s uncompress it to verify the model file.
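A minimal sketch of this check using Python's standard tarfile module. To stay runnable without S3 access, it first builds a stand-in model.tar.gz containing the same dummy file. The helper name list_archive_members and the temporary paths are illustrative assumptions, not part of the SageMaker SDK.

```python
import tarfile
import tempfile
from pathlib import Path


def list_archive_members(archive_path, extract_to):
    """Extract a .tar.gz archive and return the names of the files inside."""
    with tarfile.open(archive_path, "r:gz") as tar:
        names = [m.name for m in tar.getmembers() if m.isfile()]
        tar.extractall(path=extract_to)
    return names


with tempfile.TemporaryDirectory() as tmp:
    tmp = Path(tmp)

    # Assumption: a locally built archive stands in for the downloaded model.tar.gz.
    dummy = tmp / "dummy-model.txt"
    dummy.write_text("this is a dummy model")
    archive = tmp / "model.tar.gz"
    with tarfile.open(archive, "w:gz") as tar:
        tar.add(dummy, arcname="dummy-model.txt")

    # Extract into a separate directory and inspect the result.
    extracted_dir = tmp / "model"
    extracted_dir.mkdir()
    members = list_archive_members(archive, extracted_dir)
    extracted_text = (extracted_dir / "dummy-model.txt").read_text()
    print(members)
```

For the real artifact, archive_path would be the downloaded model.tar.gz inside our local tmp directory.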

Yes, the “dummy-model.txt” file is present. This tells us that SageMaker automatically uploads the files from the model directory (SM_MODEL_DIR) to the S3 bucket. Let’s do the same for the output data directory (SM_OUTPUT_DATA_DIR). The estimator object offers no direct way to get the S3 URI for the output data directory, but we can construct it ourselves. Let’s do that next.

print("estimator.output_path: ", sk_estimator.output_path)
print("estimator.latest_training_job.name: ", sk_estimator.latest_training_job.name)

estimator.output_path: s3://sagemaker-us-east-1-801598032724/
estimator.latest_training_job.name: sagemaker-scikit-learn-2022-07-17-15-23-53-775

def get_s3_output_uri(estimator):
    return estimator.output_path + estimator.latest_training_job.name

get_s3_output_uri(sk_estimator)

's3://sagemaker-us-east-1-801598032724/sagemaker-scikit-learn-2022-07-17-15-23-53-775'

# S3 URI for output data artifacts
s3_output_uri = get_s3_output_uri(sk_estimator) + '/output.tar.gz'
s3_output_uri

's3://sagemaker-us-east-1-801598032724/sagemaker-scikit-learn-2022-07-17-15-23-53-775/output.tar.gz'

# S3 URI for model artifact. We have already verified it.
s3_model_uri = get_s3_output_uri(sk_estimator) + '/model.tar.gz'
s3_model_uri

's3://sagemaker-us-east-1-801598032724/sagemaker-scikit-learn-2022-07-17-15-23-53-775/model.tar.gz'

# S3 URI for source code
s3_source_uri = get_s3_output_uri(sk_estimator) + '/source/sourcedir.tar.gz'
s3_source_uri

's3://sagemaker-us-east-1-801598032724/sagemaker-scikit-learn-2022-07-17-15-23-53-775/source/sourcedir.tar.gz'

Let’s download these artifacts to our local tmp directory for verification.

download: s3://sagemaker-us-east-1-801598032724/sagemaker-scikit-learn-2022-07-17-15-23-53-775/output.tar.gz to datasets/2022-07-07-sagemaker-script-mode/tmp/output.tar.gz
download: s3://sagemaker-us-east-1-801598032724/sagemaker-scikit-learn-2022-07-17-15-23-53-775/source/sourcedir.tar.gz to datasets/2022-07-07-sagemaker-script-mode/tmp/sourcedir.tar.gz

Summary till now

Let’s summarize what we have learned so far.

- We can use SageMaker SKLearn local mode to test our code in a local environment.
- The SKLearn container executes our provided script with the command /miniconda3/bin/python train_and_serve.py.
- Hyperparameters passed to the container are passed on to our script as command line arguments.
- Data from input channels is downloaded by the container and made available for our script to load and process.
- The ‘/opt/ml/model’ and ‘/opt/ml/output/data’ directories are special. Anything stored in them is automatically uploaded to the S3 bucket when the job finishes. These directories are defined in the container environment variables ‘SM_MODEL_DIR’ and ‘SM_OUTPUT_DATA_DIR’, respectively. SM_MODEL_DIR should be used to write model artifacts. SM_OUTPUT_DATA_DIR should be used to write any other supporting artifact.
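Since hyperparameters arrive as command line arguments and directories as environment variables, a script can read both with argparse. The sketch below is one possible way to do it and not the only one. The argument names --model-dir, --output-data-dir, and --train, and the helper parse_args, are illustrative choices; the two dummy hyperparameters match the ones we passed to the estimator.

```python
import argparse
import os


def parse_args(argv=None):
    """Read hyperparameters from the command line and paths from SM_* variables."""
    parser = argparse.ArgumentParser()

    # Hyperparameters arrive as "--name value" pairs.
    parser.add_argument("--dummy_param_1", type=str, default=None)
    parser.add_argument("--dummy_param_2", type=str, default=None)

    # Directory arguments default to the container's environment variables
    # (None when the variables are not set, e.g. outside the container).
    parser.add_argument("--model-dir", default=os.environ.get("SM_MODEL_DIR"))
    parser.add_argument("--output-data-dir", default=os.environ.get("SM_OUTPUT_DATA_DIR"))
    parser.add_argument("--train", default=os.environ.get("SM_CHANNEL_TRAIN"))

    # parse_known_args ignores any extra arguments the script does not declare.
    args, _unknown = parser.parse_known_args(argv)
    return args


# The same arguments the container log shows for our training job.
args = parse_args(["--dummy_param_1", "val1", "--dummy_param_2", "val2"])
print(args.dummy_param_1, args.dummy_param_2)
```

Defaulting the directory arguments to the environment variables keeps the script runnable both inside the container and from a local shell, where the paths can be supplied explicitly.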

Let’s use this knowledge to update our script to train a RandomForestClassifier on the Iris flower dataset.

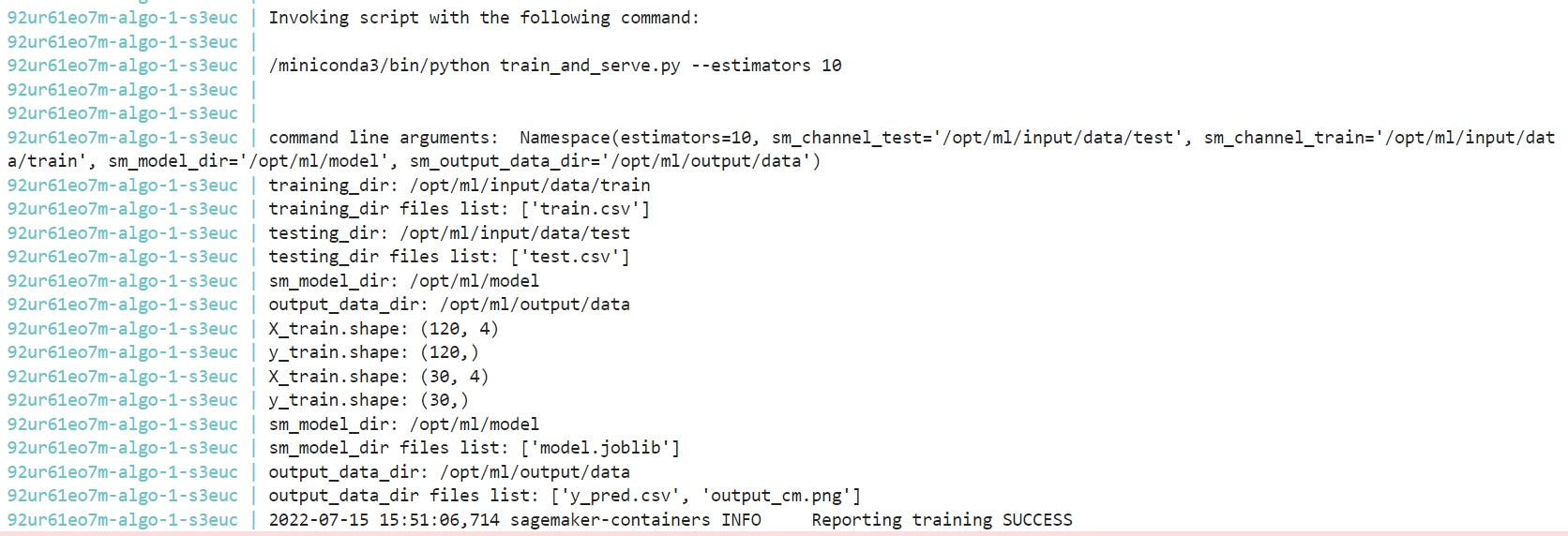

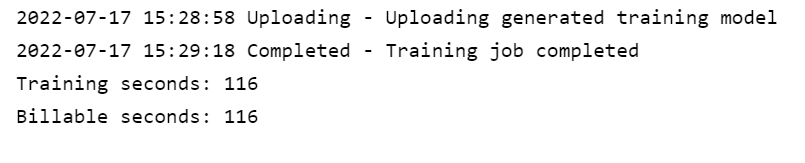

Prepare training script for RandomForestClassifier