Loading SageMaker Linear Learner Model with Apache MXNet in Python

About

You have trained a model with Linear Learner, one of Amazon SageMaker’s built-in algorithms. You could test this model by deploying it to a SageMaker endpoint, but you want to test it in your local environment instead. In this post, we will use Apache MXNet and its Gluon API to load the model locally, extract its parameters, and make predictions.

Introduction

Apache MXNet is a fully featured, flexibly programmable, and highly scalable deep learning framework that supports state-of-the-art deep learning models, including convolutional neural networks (CNNs) and long short-term memory networks (LSTMs). Amazon has selected MXNet as its deep learning framework of choice (see the blog post by Amazon CTO Werner Vogels on this). When you train a deep learning model with an Amazon SageMaker built-in algorithm, there is a good chance that the model has been trained and saved with the MXNet framework. If a model has been saved with MXNet, we can use the same library to load it in a local environment.

In my last post, Demystifying Amazon SageMaker Training for scikit-learn Lovers, I used the SageMaker built-in Linear Learner algorithm to train a model on the Boston housing dataset. Once the training was complete, the model artifacts were stored in an S3 bucket at the following location:

s3://sagemaker-us-east-1-801598032724/2022-06-08-sagemaker-training-overview/output/linear-learner-2022-06-16-09-04-57-576/output/model.tar.gz

Note that Amazon Linear Learner is built on a neural network and is different from the scikit-learn linear regression algorithm. The Linear Learner documentation does not provide details on the architecture of this neural network, but it does mention that training uses a distributed implementation of stochastic gradient descent (SGD). We can also specify hyperparameters such as momentum, learning rate, and the learning rate schedule. Also note that not all SageMaker built-in algorithms use deep learning; XGBoost, for example, is based on regression trees. If you have trained an XGBoost model, you will have to use the xgboost library to load it in a local environment; the MXNet library will not work for it.

Since Linear Learner is based on deep learning, we can use the MXNet Gluon API to load this model in our local environment and make some predictions.

This post assumes that you have already trained a Linear Learner model and that its artifacts are available in an S3 bucket. If you have not done so, you may follow my other post to train a Linear Learner model on the Boston housing dataset.

Environment

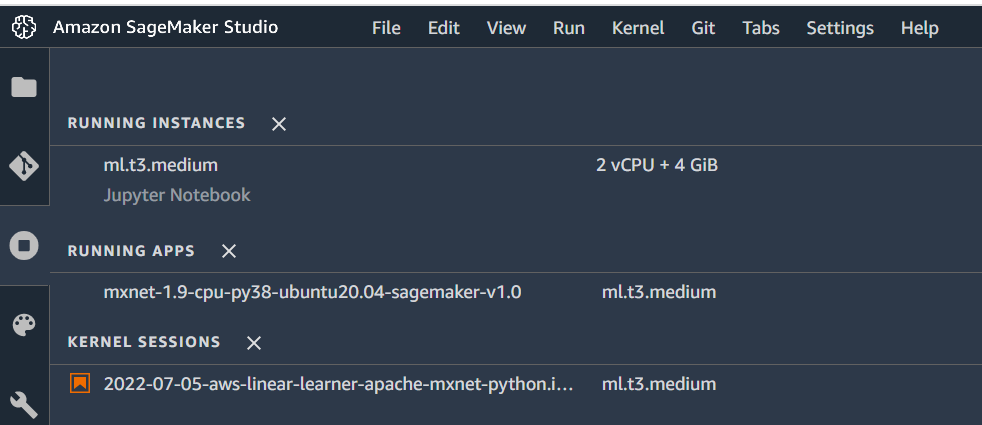

This notebook was prepared in Amazon SageMaker Studio using the Python 3 (MXNet 1.9 Python 3.8 CPU Optimized) kernel on an ml.t3.medium instance.

    NAME="Ubuntu"
    VERSION="20.04.3 LTS (Focal Fossa)"
    ID=ubuntu
    ID_LIKE=debian
    PRETTY_NAME="Ubuntu 20.04.3 LTS"
    VERSION_ID="20.04"
    HOME_URL="https://www.ubuntu.com/"
    SUPPORT_URL="https://help.ubuntu.com/"
    BUG_REPORT_URL="https://bugs.launchpad.net/ubuntu/"
    PRIVACY_POLICY_URL="https://www.ubuntu.com/legal/terms-and-policies/privacy-policy"
    VERSION_CODENAME=focal
    UBUNTU_CODENAME=focal

    aws-cli/1.22.42 Python/3.8.10 Linux/4.14.281-212.502.amzn2.x86_64 botocore/1.23.42

Let’s initialize the SageMaker API session.

    import sagemaker

    session = sagemaker.Session()
    role = sagemaker.get_execution_role()
    bucket = session.default_bucket()
    region = session.boto_region_name

    print(f"sagemaker.__version__: {sagemaker.__version__}")
    print(f"Session: {session}")
    print(f"Role: {role}")
    print(f"Bucket: {bucket}")
    print(f"Region: {region}")

    sagemaker.__version__: 2.73.0
    Session: <sagemaker.session.Session object at 0x7f6b0500a760>
    Role: arn:aws:iam::801598032724:role/service-role/AmazonSageMaker-ExecutionRole-20220516T161743
    Bucket: sagemaker-us-east-1-801598032724
    Region: us-east-1

We have our trained model artifacts available in an S3 bucket. Let’s define that bucket path.

We will use the SageMaker SDK to download the model artifacts from the S3 bucket to a local directory. Let’s define the local path.

Download the model artifacts.
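The three steps above (S3 path, local path, download) can be sketched as follows. This is a minimal sketch, not the original notebook cell: the S3 URI is the one quoted earlier in this post, the local directory is the one that appears in the extraction output further down, and the helper name `download_model_artifacts` is hypothetical. It assumes the SageMaker Python SDK's `S3Downloader.download` and AWS credentials with read access to the bucket. The import sits inside the function so the path definitions can be inspected without the SDK installed.

```python
# S3 location of the trained model artifacts (as given earlier in this post)
model_uri = (
    "s3://sagemaker-us-east-1-801598032724/"
    "2022-06-08-sagemaker-training-overview/output/"
    "linear-learner-2022-06-16-09-04-57-576/output/model.tar.gz"
)

# Local directory that will receive the downloaded archive
local_path = "datasets/2022-07-05-aws-linear-learner-apache-mxnet-python/"


def download_model_artifacts(s3_uri, target_dir, sagemaker_session=None):
    """Download the object(s) at s3_uri into target_dir (hypothetical helper)."""
    # Lazy import: the SageMaker SDK is only required when a download is requested
    from sagemaker.s3 import S3Downloader

    return S3Downloader.download(
        s3_uri=s3_uri,
        local_path=target_dir,
        sagemaker_session=sagemaker_session,
    )


# Usage, with the session created earlier:
# download_model_artifacts(model_uri, local_path, sagemaker_session=session)
```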

Once downloaded, you will find an archive “model.tar.gz” in the local directory. Let’s extract this file.

Extracting the archive gives us a zip file named model_algo-1. Let’s unzip it to get the model contents.
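Both extraction steps can also be done from Python with the standard library. The sketch below is an alternative to shell tools such as `tar` and `unzip`, not the original notebook cell; the function name `extract_model_artifacts` is hypothetical. It unpacks the tarball and then unzips any zip archive that came out of it. As with any archive extraction, it should only be run on archives you trust.

```python
import tarfile
import zipfile
from pathlib import Path


def extract_model_artifacts(local_dir, archive_name="model.tar.gz"):
    """Unpack the tarball in local_dir, then unzip every zip archive it contained.

    Returns the names of the files extracted from the inner zip archive(s).
    """
    local_dir = Path(local_dir)

    # Step 1: unpack the gzip-compressed tarball
    with tarfile.open(local_dir / archive_name, "r:gz") as tar:
        member_names = tar.getnames()
        tar.extractall(path=local_dir)

    # Step 2: unzip the inner archive(s), e.g. "model_algo-1"
    extracted = []
    for name in member_names:
        inner = local_dir / name
        if inner.is_file() and zipfile.is_zipfile(inner):
            with zipfile.ZipFile(inner) as zf:
                zf.extractall(local_dir)
                extracted.extend(zf.namelist())
    return extracted
```

Calling `extract_model_artifacts("datasets/2022-07-05-aws-linear-learner-apache-mxnet-python/")` would leave the model files next to the downloaded archive.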

    Archive: datasets/2022-07-05-aws-linear-learner-apache-mxnet-python//model_algo-1
    extracting: datasets/2022-07-05-aws-linear-learner-apache-mxnet-python/additional-params.json
    extracting: datasets/2022-07-05-aws-linear-learner-apache-mxnet-python/mx-mod-symbol.json
    extracting: datasets/2022-07-05-aws-linear-learner-apache-mxnet-python/manifest.json
    extracting: datasets/2022-07-05-aws-linear-learner-apache-mxnet-python/mx-mod-0000.params

The extracted model has two important files:

* mx-mod-symbol.json is the JSON file that defines the computational graph for the model
* mx-mod-0000.params is a binary file that contains the parameters of the trained model

Serializing models as JSON files has the benefit that these models can be loaded from other language bindings like C++ or Scala for faster inference or inference in different environments. You can read more about it here: Saving and Loading Gluon Models.

The Gluon API is a wrapper around the low-level MXNet API that provides a simple interface for deep learning. You may read more about this API here: mxnet.gluon

Let’s read the model’s computational graph.
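Reading the graph only needs the standard library, since the symbol file is plain JSON. A minimal sketch, assuming the file sits in the local directory used in this post; the helper name `read_symbol_json` is hypothetical. It returns both the raw string (the form MXNet's `sym.load_json` expects) and the parsed dictionary for inspection.

```python
import json


def read_symbol_json(path):
    """Return the raw JSON string and the parsed graph definition."""
    with open(path, "r") as f:
        sym_json_string = f.read()
    return sym_json_string, json.loads(sym_json_string)


def list_operators(graph):
    """List the operator names of all non-variable nodes in the graph."""
    # Variables (inputs and parameters) are stored with op == "null"
    return [node["op"] for node in graph["nodes"] if node["op"] != "null"]


# Usage:
# sym_json_string, sym_json = read_symbol_json(
#     "datasets/2022-07-05-aws-linear-learner-apache-mxnet-python/mx-mod-symbol.json"
# )
# print(list_operators(sym_json))
```

Pretty-printing the parsed dictionary, for example with `pprint.pprint(sym_json)`, gives a view of the nodes like the one that follows.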

    {'arg_nodes': [0, 1, 3, 5],
     'attrs': {'mxnet_version': ['int', 10301]},
     'heads': [[6, 0, 0]],
     'node_row_ptr': [0, 1, 2, 3, 4, 5, 6, 7],
     'nodes': [{'inputs': [], 'name': 'data', 'op': 'null'},
               {'attrs': {'__shape__': '(12, 1)'},
                'inputs': [],
                'name': 'fc0_weight',
                'op': 'null'},
               {'inputs': [[0, 0, 0], [1, 0, 0]], 'name': 'dot46', 'op': 'dot'},
               {'attrs': {'__lr_mult__': '10.0', '__shape__': '(1, 1)'},
                'inputs': [],
                'name': 'fc0_bias',
                'op': 'null'},
               {'inputs': [[2, 0, 0], [3, 0, 0]],
                'name': 'broadcast_plus46',
                'op': 'broadcast_add'},
               {'inputs': [], 'name': 'out_label', 'op': 'null'},
               {'inputs': [[4, 0, 0], [5, 0, 0]],
                'name': 'linearregressionoutput46',
                'op': 'LinearRegressionOutput'}]}

    import mxnet
    from mxnet import gluon

    # initialize the model graph
    # (sym_json_string holds the contents of mx-mod-symbol.json)
    model = gluon.nn.SymbolBlock(
        outputs=mxnet.sym.load_json(sym_json_string),
        inputs=mxnet.sym.var("data"),
    )

    /usr/local/lib/python3.8/dist-packages/mxnet/gluon/block.py:1849: UserWarning: Cannot decide type for the following arguments. Consider providing them as input:
        data: None
      input_sym_arg_type = in_param.infer_type()[0]

    /usr/local/lib/python3.8/dist-packages/mxnet/gluon/parameter.py:896: UserWarning: Parameter 'fc0_weight' is already initialized, ignoring. Set force_reinit=True to re-initialize.
      v.initialize(None, ctx, init, force_reinit=force_reinit)
    /usr/local/lib/python3.8/dist-packages/mxnet/gluon/parameter.py:896: UserWarning: Parameter 'fc0_bias' is already initialized, ignoring. Set force_reinit=True to re-initialize.
      v.initialize(None, ctx, init, force_reinit=force_reinit)

At this point the model is ready in our local environment, and we can use it to make some predictions.
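The graph definition alone carries no trained values, so the parameters in mx-mod-0000.params have to be loaded into the SymbolBlock before the model can predict. The following is a sketch of that step under stated assumptions, not the original notebook cell. The helper name `load_linear_learner` is hypothetical. The use of `allow_missing=True` followed by `initialize()` is inferred from the "already initialized" warnings shown above and from the fact that `out_label` is a label input in the graph rather than a trained parameter. It targets the MXNet 1.x Gluon API.

```python
def load_linear_learner(symbol_path, params_path):
    """Build a Gluon SymbolBlock from the symbol file and load trained parameters."""
    # Lazy imports: MXNet 1.x is only required when the function is called
    import mxnet
    from mxnet import gluon

    with open(symbol_path, "r") as f:
        sym_json_string = f.read()

    model = gluon.nn.SymbolBlock(
        outputs=mxnet.sym.load_json(sym_json_string),
        inputs=mxnet.sym.var("data"),
    )

    # Assumption: "out_label" is in the graph but not in the params file,
    # so parameters missing from the file are tolerated
    model.load_parameters(params_path, allow_missing=True)

    # Initialize whatever is still uninitialized; parameters loaded above
    # are left untouched (MXNet warns that it is ignoring them)
    model.initialize()
    return model


# Usage:
# local_path = "datasets/2022-07-05-aws-linear-learner-apache-mxnet-python/"
# model = load_linear_learner(
#     local_path + "mx-mod-symbol.json", local_path + "mx-mod-0000.params"
# )
```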

Let’s prepare an input request. This request is the same as the one used in the model training blog post.

We need to convert the request from a Python list to an MXNet array before using it for inference.

    print(f"type(input_request): {type(input_request)}")
    print(f"type(input_request_nd): {type(input_request_nd)}")

    type(input_request): <class 'list'>
    type(input_request_nd): <class 'mxnet.ndarray.ndarray.NDArray'>

Let’s pass the converted request to the model for inference.
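The conversion and the forward pass can be sketched together as below. The actual request values come from the training post and are not repeated here, so the sketch takes them as an argument. The helper name `predict` is hypothetical, and it assumes the request is a list of rows with 12 feature values each, matching the (12, 1) weight shape in the graph.

```python
def predict(model, input_request):
    """Convert a list of feature rows to an NDArray and run the model on it."""
    # Lazy import: MXNet 1.x is only required when the function is called
    import mxnet

    # Python list -> mxnet.ndarray.NDArray (float32 by default)
    input_request_nd = mxnet.nd.array(input_request)

    # For n rows of 12 features the graph computes
    # dot((n, 12), (12, 1)) + bias, giving an output of shape (n, 1)
    return model(input_request_nd).asnumpy()


# Usage, with the model and request prepared earlier:
# predictions = predict(model, input_request)
# print(predictions[0][0])
```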

    Extension horovod.torch has not been built: /usr/local/lib/python3.8/dist-packages/horovod/torch/mpi_lib/_mpi_lib.cpython-38-x86_64-linux-gnu.so not found
    If this is not expected, reinstall Horovod with HOROVOD_WITH_PYTORCH=1 to debug the build error.
    Warning! MPI libs are missing, but python applications are still avaiable.
    [2022-07-05 10:53:31.777 mxnet-1-9-cpu-py38-ub-ml-t3-medium-3179f602905714e1b45dfa06b970:222 INFO utils.py:27] RULE_JOB_STOP_SIGNAL_FILENAME: None
    [2022-07-05 10:53:31.944 mxnet-1-9-cpu-py38-ub-ml-t3-medium-3179f602905714e1b45dfa06b970:222 INFO profiler_config_parser.py:111] Unable to find config at /opt/ml/input/config/profilerconfig.json. Profiler is disabled.

    29.986717

That’s it. We have loaded a SageMaker built-in model in our local environment and made a prediction with it. But we can go one step further and explore the model’s trained parameters.

    (
      Parameter fc0_weight (shape=(12, 1), dtype=<class 'numpy.float32'>)
      Parameter fc0_bias (shape=(1, 1), dtype=<class 'numpy.float32'>)
      Parameter out_label (shape=(1,), dtype=<class 'numpy.float32'>)
    )

Let’s define a function to extract the model’s weights and bias.

def extract_weight_and_bias(model):

params = model.collect_params()

weight = params["fc0_weight"].data().asnumpy()

bias = params["fc0_bias"].data()[0].asscalar()

return {"weight": weight, "bias": bias}

weight_and_bias = extract_weight_and_bias(model)

weight_and_bias{'weight': array([[-1.6160294e-01],

[ 5.2438524e-02],

[ 1.5013154e-02],

[-4.4300285e-01],

[-2.0226759e+01],

[ 3.2423832e+00],

[ 7.3540364e-03],

[-1.4330027e+00],

[ 2.0710023e-01],

[-8.0383439e-03],

[-1.0465978e+00],

[-5.0012934e-01]], dtype=float32),

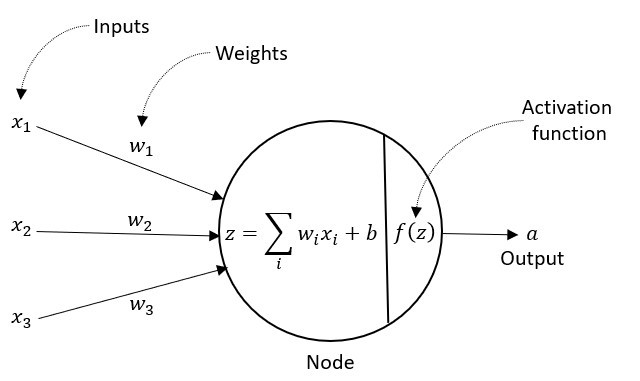

'bias': 44.62983}This shows that model has 12 weights, one for each input parameter, and a bias. For linear learner there is no activation function so we can use summation formula to create a prediction using the provided weights and bais.

We have all the ingredients ready. Let’s use them to calculate the prediction ourselves.
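The calculation is the summation formula prediction = w_1*x_1 + ... + w_12*x_12 + bias. Below is a minimal sketch in plain Python, using the weights and bias printed above; the function name `predict_linear` is hypothetical. The input request from the training post is not repeated in this post, so the sketch takes the feature values as an argument rather than assuming them.

```python
# Weights and bias copied from the extracted parameters shown above
weights = [
    -1.6160294e-01, 5.2438524e-02, 1.5013154e-02, -4.4300285e-01,
    -2.0226759e+01, 3.2423832e+00, 7.3540364e-03, -1.4330027e+00,
    2.0710023e-01, -8.0383439e-03, -1.0465978e+00, -5.0012934e-01,
]
bias = 44.62983


def predict_linear(features, weights, bias):
    """Return the weighted sum of the features plus the bias."""
    if len(features) != len(weights):
        raise ValueError("expected one feature value per weight")
    return sum(w * x for w, x in zip(weights, features)) + bias


# Sanity check: with all features at zero, only the bias remains
assert predict_linear([0.0] * 12, weights, bias) == bias
```

With the NumPy array returned by `extract_weight_and_bias`, the same calculation can be written as `numpy.dot(features, weight) + bias`. Given the same input request, the result should closely match the value returned by the model, up to float32 rounding.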